# if you dont have these packages installed yet, please use the install.packages("package_name") command.

library(tidyverse) # needed for data manipulation and plotting

library(rstan)

library(brms)

library(psych) #to get some extended summary statistics

library(bayesplot) # needed for plotting

library(ggmcmc)

library(mcmcplots)

library(tidybayes)

library(invgamma)WAMBS R Tutorial (using brms)

In this tutorial you follow the steps of the When-to-Worry-and-How-to-Avoid-the-Misuse-of-Bayesian-Statistics - checklist (the WAMBS-checklist).

WAMBS checklist

When to worry, and how to Avoid the Misuse of Bayesian Statistics

To be checked before estimating the model

- Do you understand the priors?

To be checked after estimation but before inspecting model results

- Does the trace-plot exhibit convergence?

- Does convergence remain after doubling the number of iterations?

- Does the posterior distribution histogram have enough information?

- Do the chains exhibit a strong degree of autocorrelation?

- Do the posterior distributions and posterior predictions make substantive sense?

Understanding the exact influence of the priors

- Do different specification of the multivariate variance priors influence the results?

- Is there a notable effect of the prior when compared with non-informative priors?

- Are the results stable from a sensitivity analysis?

- Is the Bayesian way of interpreting and reporting model results used?

Example Data

The data we be use for this exercise is based on a study about predicting PhD-delays (Van de Schoot, Yerkes, Mouw and Sonneveld 2013). The data can be downloaded here. Among many other questions, the researchers asked the Ph.D. recipients how long it took them to finish their Ph.D. thesis (n=333). It appeared that Ph.D. recipients took an average of 59.8 months (five years and four months) to complete their Ph.D. trajectory. The variable B3_difference_extra measures the difference between planned and actual project time in months (mean=9.96, minimum=-31, maximum=91, sd=14.43). For more information on the sample, instruments, methodology and research context we refer the interested reader to the paper.

For the current exercise we are interested in the question whether age (M = 30.7, SD = 4.48, min-max = 26-69) of the Ph.D. recipients is related to a delay in their project.

The relation between completion time and age is expected to be non-linear. This might be due to that at a certain point in your life (i.e., mid thirties), family life takes up more of your time than when you are in your twenties or when you are older.

So, in our model the gap (B3_difference_extra) is the dependent variable and age (E22_Age) and age\(^2\)(E22_Age_Squared ) are the predictors. The data can be found in the file phd-delays.csv .

Question: Write down the null and alternative hypotheses that represent this question. Which hypothesis do you deem more likely?

Click to show result

\(H_0:\) \(age\) is not related to a delay in the PhD projects.

\(H_1:\) \(age\) is related to a delay in the PhD projects.

\(H_0:\) \(age^2\) is not related to a delay in the PhD projects.

\(H_1:\) \(age^2\) is related to a delay in the PhD projects.

Preparation - Importing and Exploring Data

note. If you are getting the error: Error: .onLoad failed in loadNamespace() for ‘dbplyr’, details: call: setClass(cl, contains = c(prevClass, “VIRTUAL”), where = where) error: error in contained classes (“character”) for class “ident”; class definition removed from ‘dbplyr’ the brms package is loaded before the tidyverse package. Please restart R and load them in the order, tidyverse first brms second.

You can find the data in the file phd-delays.csv , which contains all variables that you need for this analysis. Although it is a .csv-file, you can directly load it into R using the following syntax:

#read in data

dataPHD <- read.csv2(file="phd-delays.csv")

colnames(dataPHD) <- c("diff", "child", "gender","age","age2")Alternatively, you can directly download them from GitHub into your R work space using the following command:

dataPHD <- read.csv2(file="https://raw.githubusercontent.com/UtrechtUniversity/BayesianEstimation/main/content/tuesday/phd-delays.csv")

colnames(dataPHD) <- c("diff", "child", "gender","age","age2")GitHub is a platform that allows researchers and developers to share code, software and research and to collaborate on projects (see https://github.com/)

Once you loaded in your data, it is advisable to check whether your data import worked well. Therefore, first have a look at the summary statistics of your data. you can do this by using the describe() function.

Question: Have all your data been loaded in correctly? That is, do all data points substantively make sense? If you are unsure, go back to the .csv-file to inspect the raw data.

describe(dataPHD) vars n mean sd median trimmed mad min max range skew

diff 1 333 9.97 14.43 5 6.91 7.41 -31 91 122 2.21

child 2 333 0.18 0.38 0 0.10 0.00 0 1 1 1.66

gender 3 333 0.52 0.50 1 0.52 0.00 0 1 1 -0.08

age 4 333 31.68 6.86 30 30.39 2.97 26 80 54 4.45

age2 5 333 1050.22 656.39 900 928.29 171.98 676 6400 5724 6.03

kurtosis se

diff 5.92 0.79

child 0.75 0.02

gender -2.00 0.03

age 24.99 0.38

age2 42.21 35.97The descriptive statistics make sense:

diff: Mean (9.97), SE (0.79)

age: Mean (31.68), SE (0.38)

age2: Mean (1050.22), SE (35.97)

Step 1: Do you understand the priors?

1.Do you understand the priors?

Before actually looking at the data we first need to think about the prior distributions and hyperparameters for our model. For the current model, there are four priors:

- the intercept

- the two regression parameters (\(\beta_1\) for the relation with AGE and \(\beta_2\) for the relation with AGE2)

- the residual variance (\(\in\))

We first need to determine which distribution to use for the priors. Let's use for the

- intercept a normal prior with \(\mathcal{N}(\mu_0, \sigma^{2}_{0})\), where \(\mu_0\) is the prior mean of the distribution and \(\sigma^{2}_{0}\) is the variance parameter

- \(\beta_1\) a normal prior with \(\mathcal{N}(\mu_1, \sigma^{2}_{1})\)

- \(\beta_2\) a normal prior with \(\mathcal{N}(\mu_2, \sigma^{2}_{2})\)

- \(\in\) an Inverse Gamma distribution with \(IG(\kappa_0,\theta_0)\), where \(\kappa_0\) is the shape parameter of the distribution and \(\theta_0\) the rate parameter

Next, we need to specify actual values for the hyperparameters of the prior distributions. Let's say we found a previous study and based on this study the following hyperparameters can be specified:

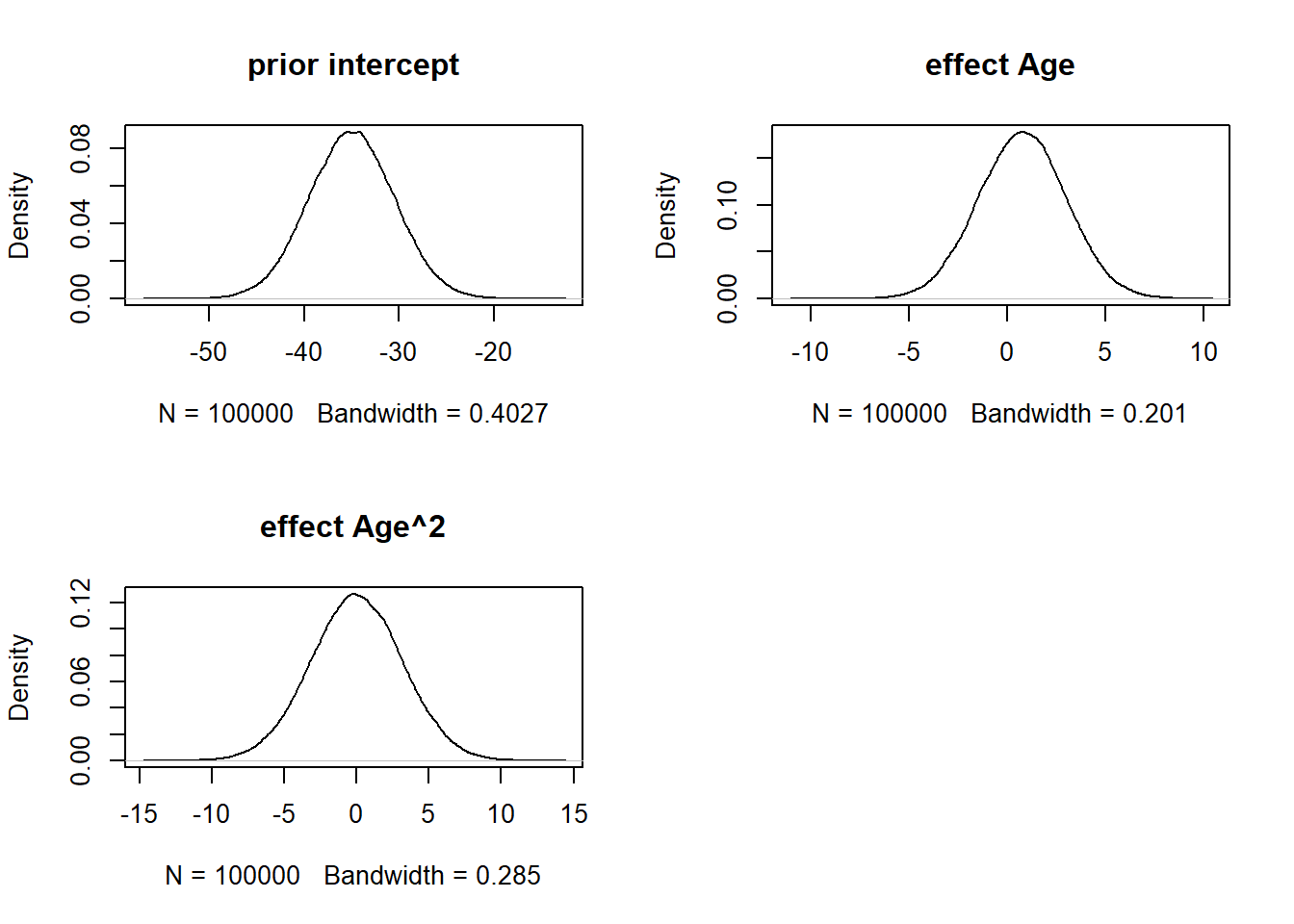

- intercept \(\sim \mathcal{N}(-35, 20)\)

- \(\beta_1 \sim \mathcal{N}(.8, 5)\)

- \(\beta_2 \sim \mathcal{N}(0, 10)\)

- \(\in \sim IG(.5, .5)\) This is an uninformative prior for the residual variance, which has been found to perform well in simulation studies.

It is a good idea to plot these distribution to see how they look. To do so, one easy way is to sample a lot of values from one of these distributions and make a density plot out of it, see the code below. Replace the ‘XX’ with the values of the hyperparameters.

par(mfrow = c(2,2))

plot(density(rnorm(n = 100000, mean = XX, sd = sqrt(XX))), main = "prior intercept") # the rnorm function uses the standard devation instead of variance, that is why we use the sqrt

plot(density(rnorm(n = 100000, mean = XX, sd = sqrt(XX))), main = "effect Age")

plot(density(rnorm(n = 100000, mean = XX, sd = sqrt(XX))), main = "effect Age^2")Click to show result

par(mfrow = c(2,2))

plot(density(rnorm(n = 100000, mean = -35, sd = sqrt(20))), main = "prior intercept") # the rnorm function uses the standard deviation instead of variance, that is why we use the sqrt

plot(density(rnorm(n = 100000, mean = .8, sd = sqrt(5))), main = "effect Age")

plot(density(rnorm(n = 100000, mean = 0, sd = sqrt(10))), main = "effect Age^2")

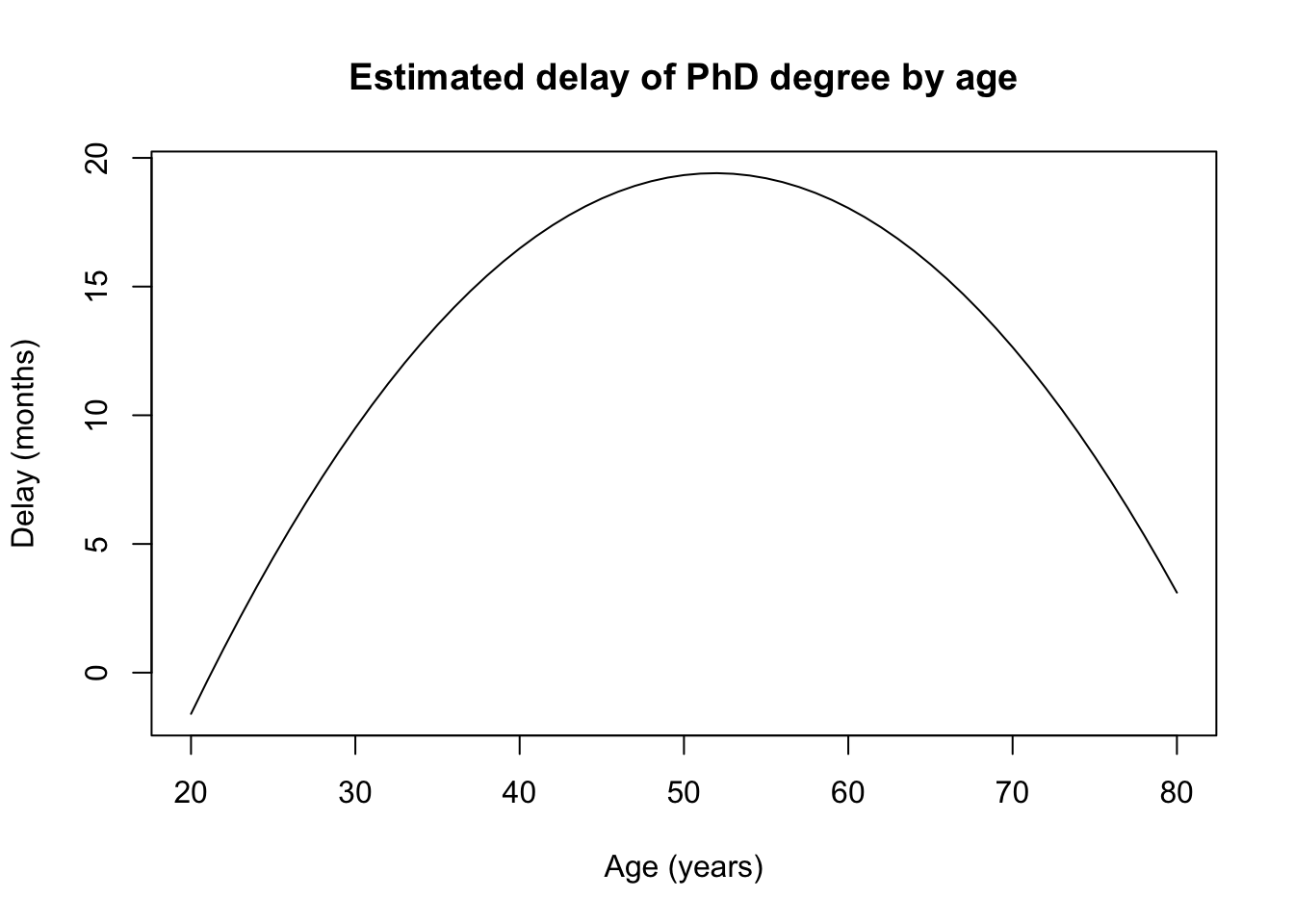

We can also plot what the expected delay would be (like we did in the brms regression assignment) given these priors. With these priors the regression formula would be: \(delay=-35+ .8*age + 0*age^2\). These are just the means of the priors and do not yet qualify the different levels of uncertainty. Replace the ‘XX’ in the following code with the prior means.

years <- 20:80

delay <- XX + XX*years + XX*years^2

plot(years, delay, type = "l")Click to show result

years <- 20:80

delay <- -35 + .8*years + 0*years^2

plot(years, delay, type= "l")

Step 2: Run the model and check for convergence

To run a multiple regression with brms, you first specify the model, then fit the model and finally acquire the summary (similar to the frequentist model using lm()). The model is specified as follows:

- A dependent variable we want to predict.

- A “~”, that we use to indicate that we now give the other variables of interest. (comparable to the ‘=’ of the regression equation).

- The different independent variables separated by the summation symbol ‘+’.

- Finally, we insert that the dependent variable has a variance and that we want an intercept.

- We do set a seed to make the results exactly reproducible.

- To specify priors, using the

set_prior()function. Be careful, Stan uses standard deviations instead of variance in the normal distribution. The standard deviations is the square root of the variance, so a variance of 5 corresponds to a standard deviation of 2.24 and a variance of 10 corresponds to a standard deviation of 3.16. - To place a prior on the fixed intercept, one needs to include

0 + Intercept. See here for an explanation.

There are many other options we can select, such as the number of chains how many iterations we want and how long of a warm-up phase we want, but we will just use the defaults for now.

For more information on the basics of brms, see the website and vignettes

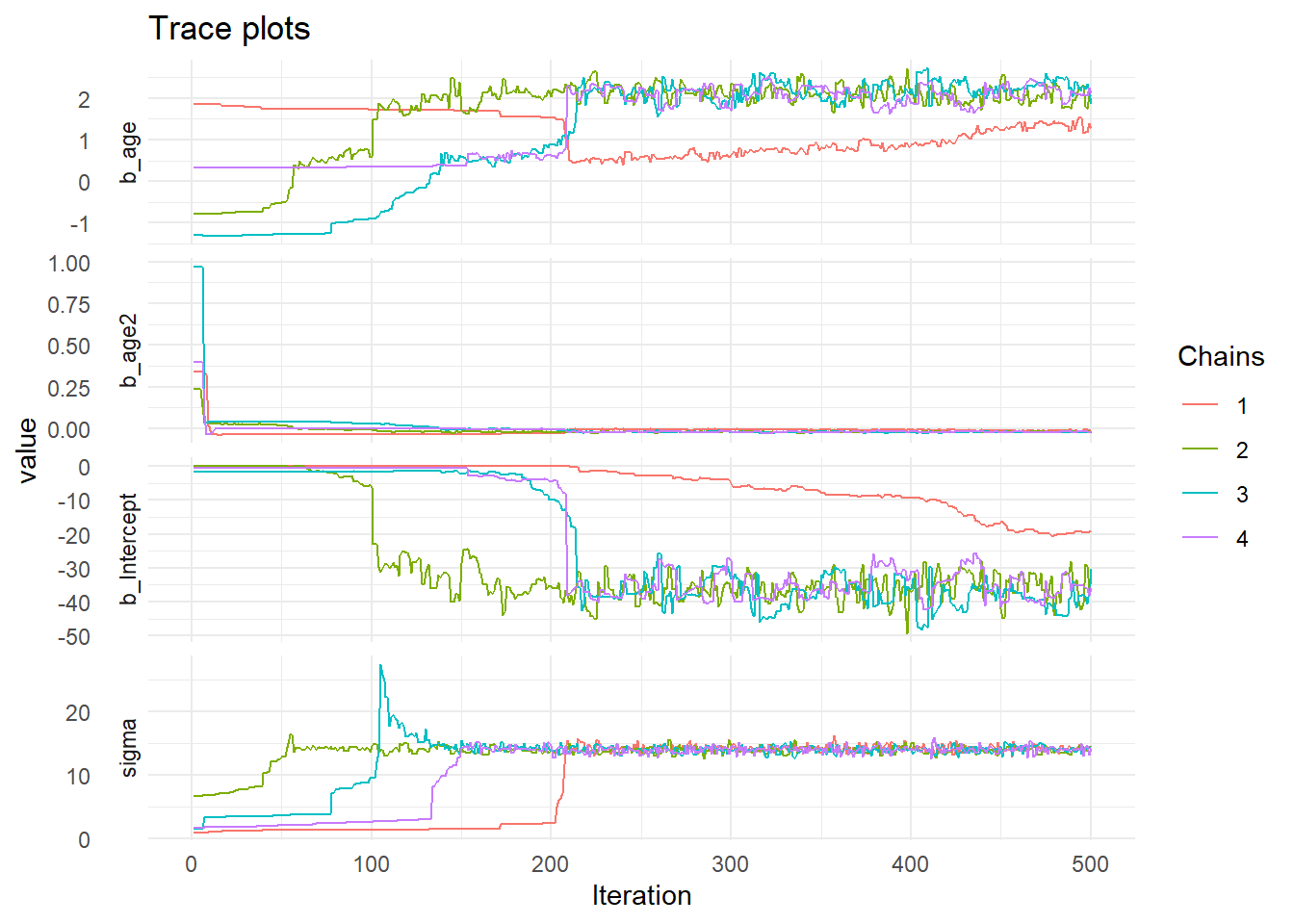

2. Does the trace-plot exhibit convergence?

First we run the analysis with only a short burnin period of 250 samples and then take another 250 samples (iter includes the warmup samples).

The following code is how to specify the regression model:

# 1) set the priors

priors_inf <- c(set_prior("normal(.8, 2.24)", class = "b", coef = "age"),

set_prior("normal(0, 3.16)", class = "b", coef = "age2"),

set_prior("normal(-35, 4.47)", class = "b", coef = "Intercept"),

set_prior("inv_gamma(.5,.5)", class = "sigma"))

# 2) specify the model

model_few_samples <- brm(formula = diff ~ 0 + Intercept + age + age2,

data = dataPHD,

prior = priors_inf,

warmup = 250,

iter = 500,

seed = 12345)Now we can plot the trace plots.

modeltransformed <- ggs(model_few_samples) # the ggs function transforms the BRMS output into a longformat tibble, that we can use to make different types of plots.

ggplot(filter(modeltransformed, Parameter %in% c("b_Intercept", "b_age", "b_age2", "sigma")),

aes(x = Iteration,

y = value,

col = as.factor(Chain)))+

geom_line()+

facet_grid(Parameter ~ .,

scale = 'free_y',

switch = 'y')+

labs(title = "Trace plots",

col = "Chains") +

theme_minimal()

Alternatively, you can simply make use of the built-in plotting capabilities of Rstan.

mcmc_plot(model_few_samples, type = "trace")No divergences to plot.

Note that the first option using gss and ggplot displays all iterations, including warmup (i.e., burnin), whereas the built-in mcmc_plot function removes the warmup iterations by default.

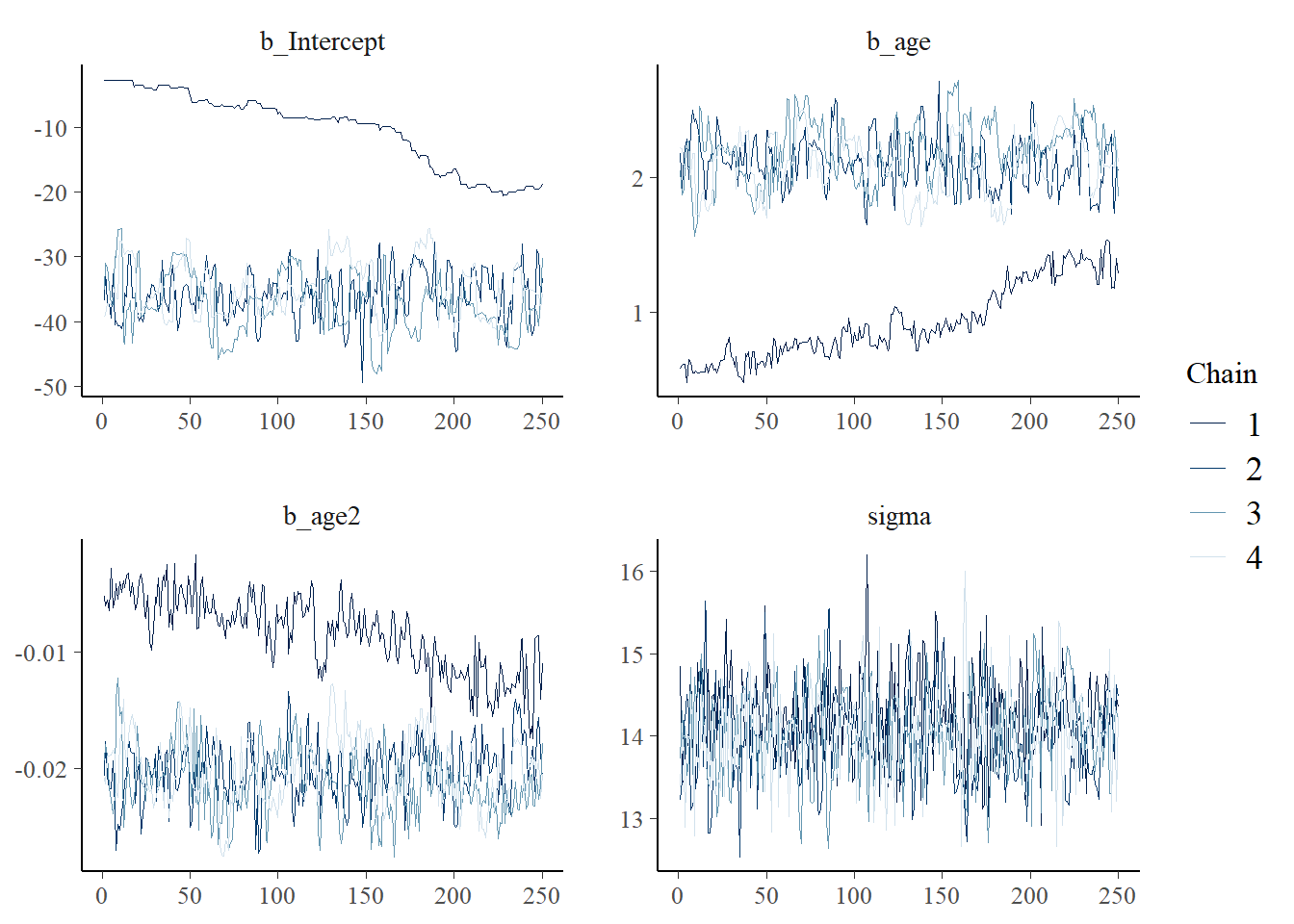

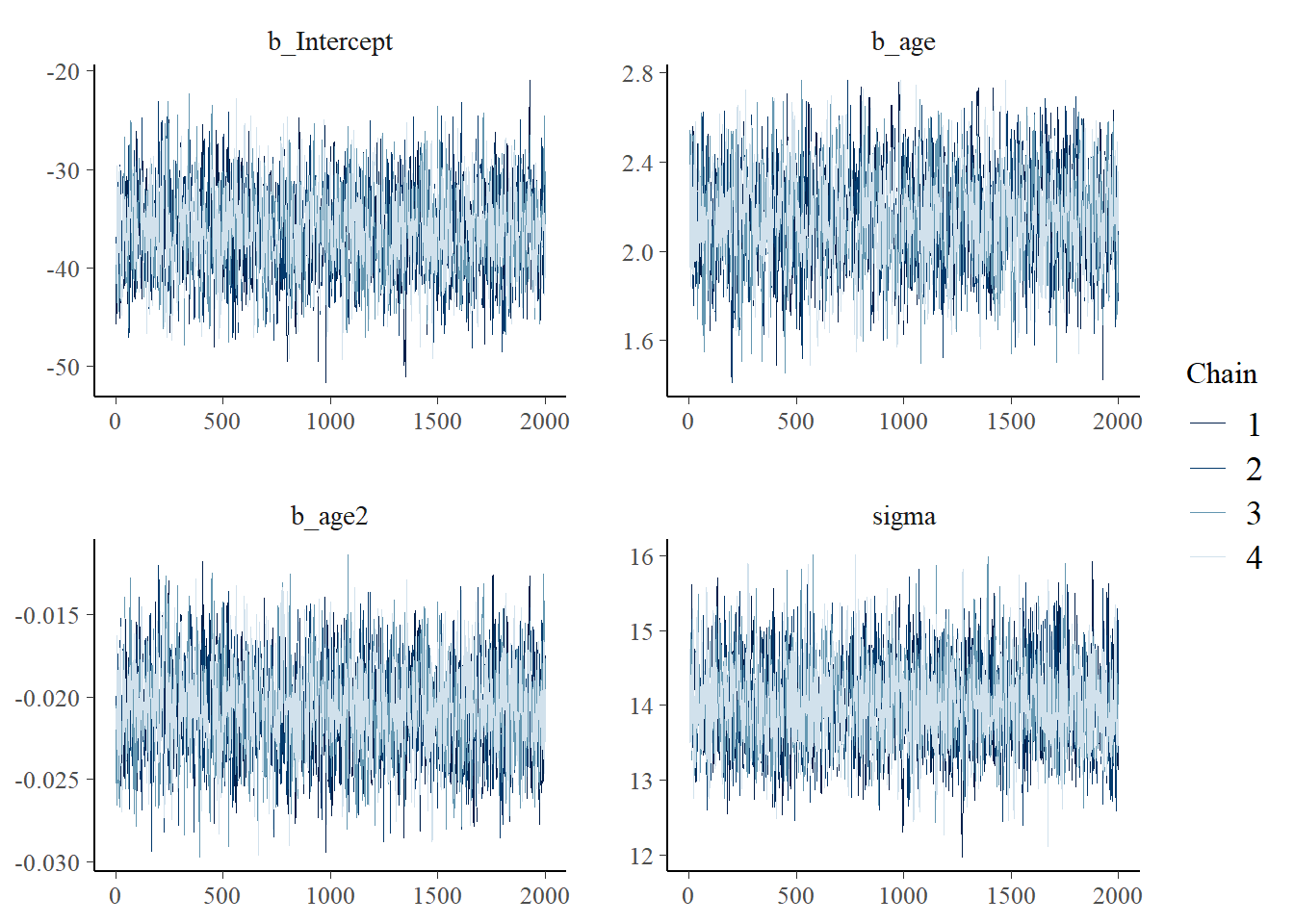

The trace (caterpillar) plots suggest that the chains are not neatly converged (we ideally want one “big, fat caterpillar”, like the one for sigma). This indicates we need more samples.

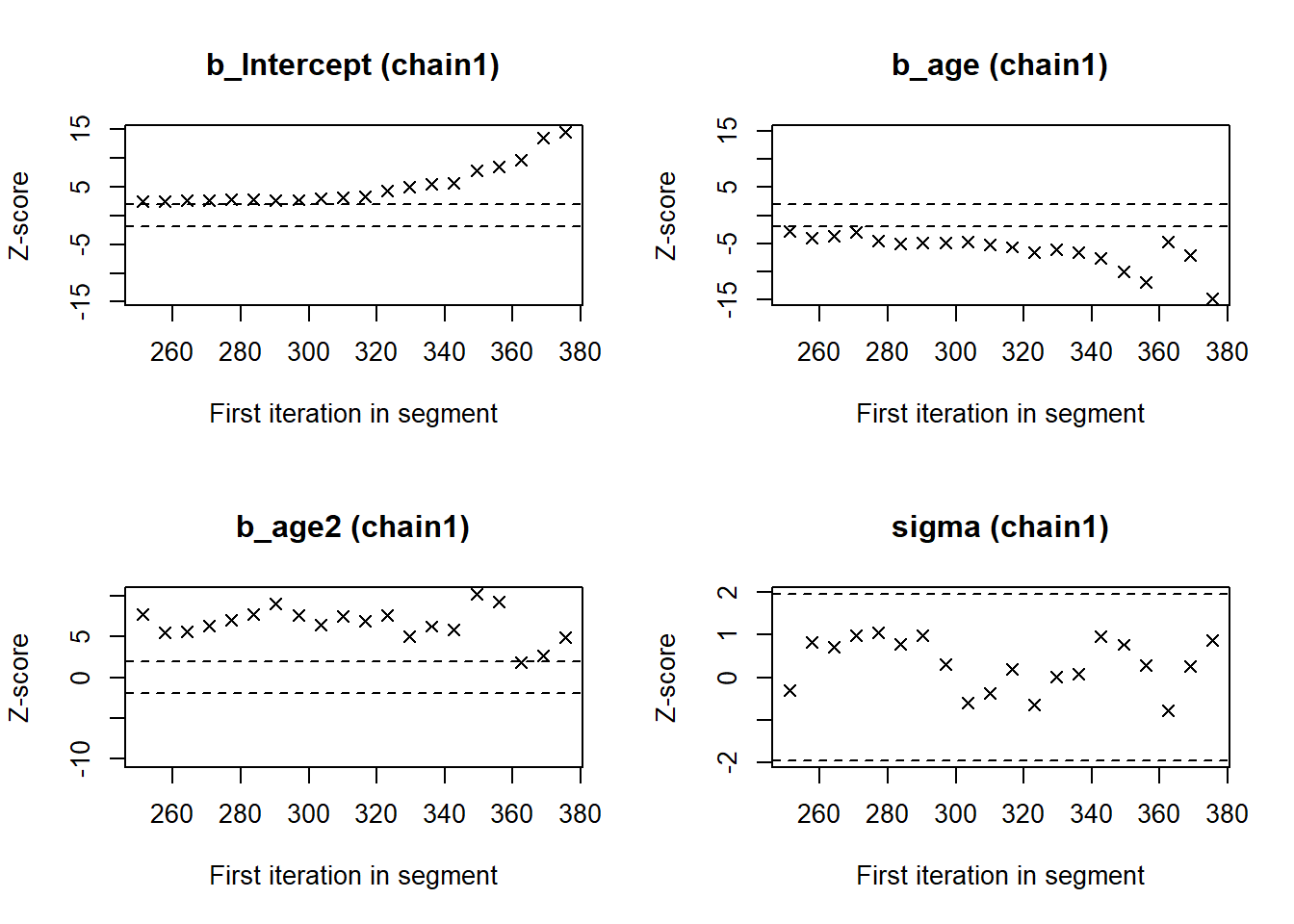

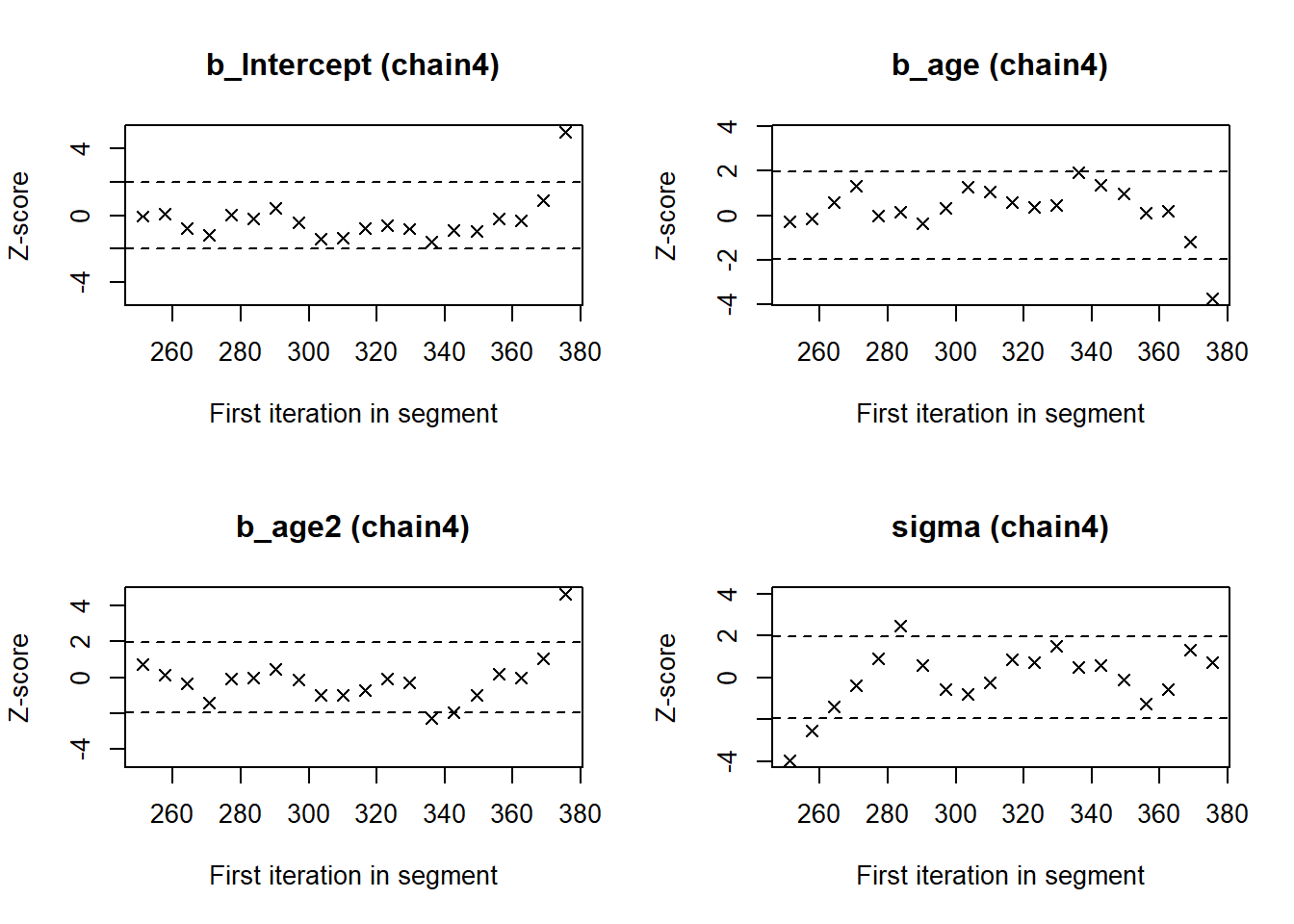

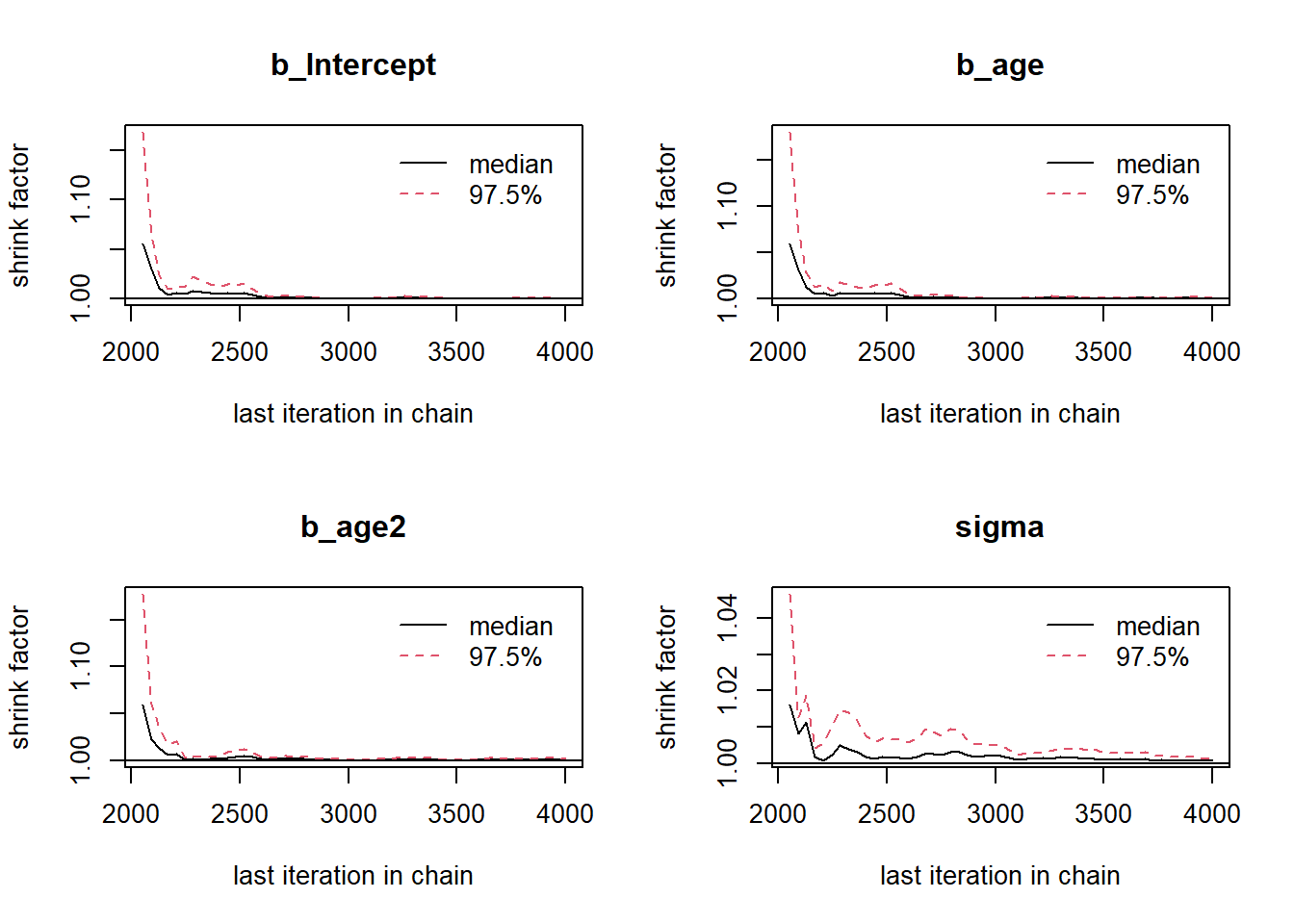

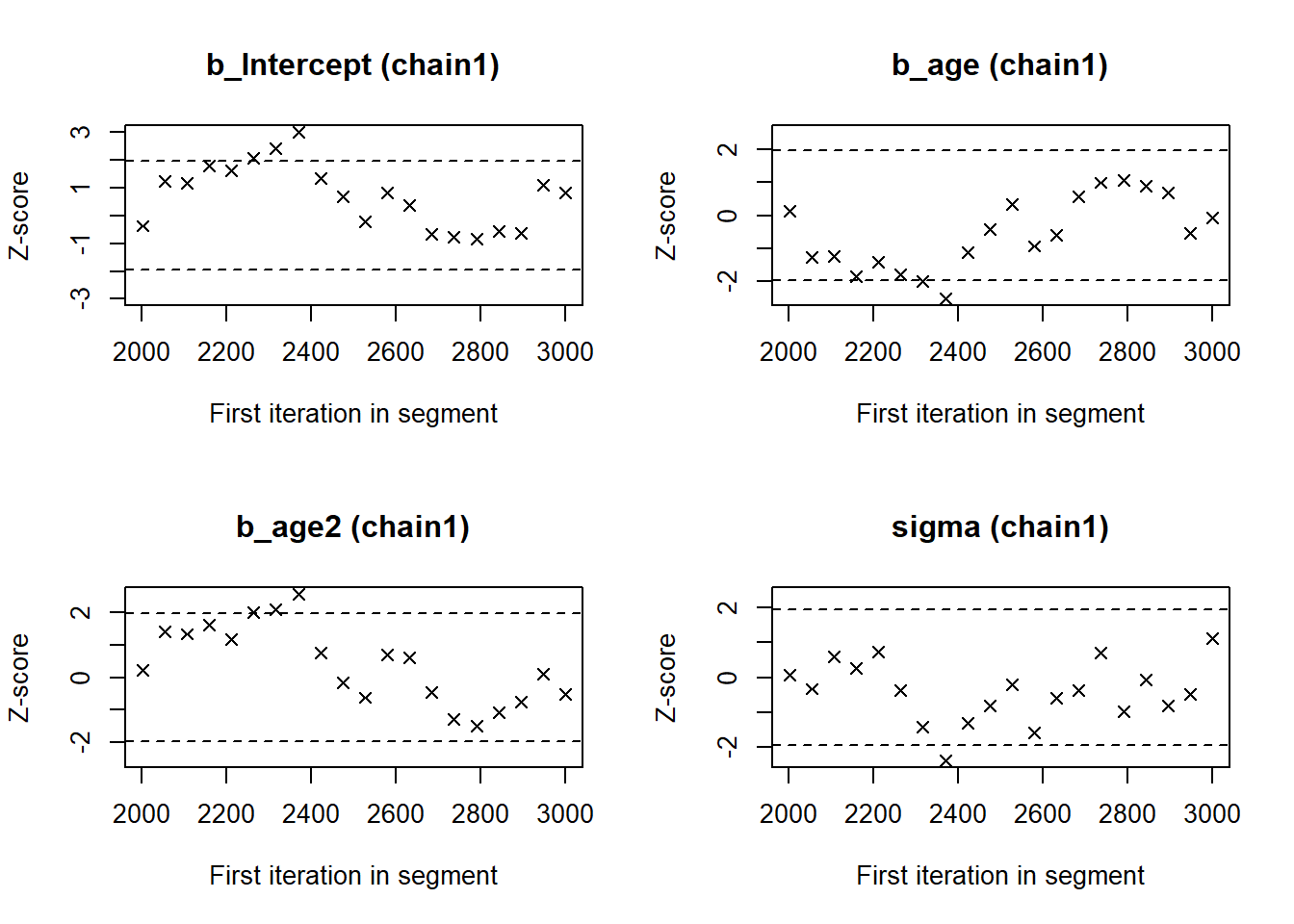

We can check if the chains converged by having a look at the convergence diagnostics. Two of these diagnostics of interest include the Gelman and Rubin diagnostic and the Geweke diagnostic.

- The Gelman-Rubin Diagnostic shows the PSRF values (using the within and between chain variability). You should look at the Upper CI/Upper limit, which are all should be close to 1. If they aren’t close to 1, you should use more iterations. Note: The Gelman and Rubin diagnostic is also automatically given in the summary of

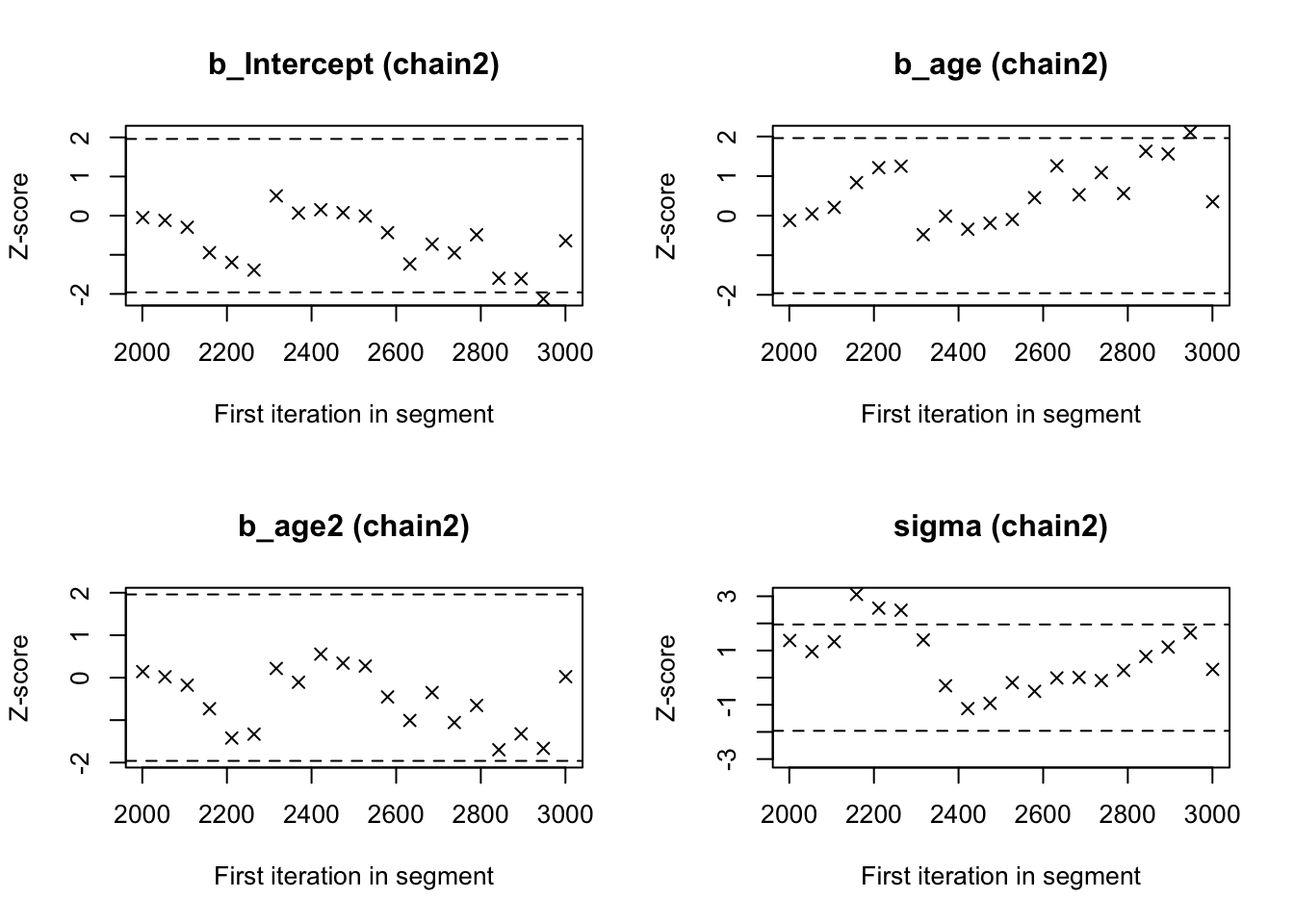

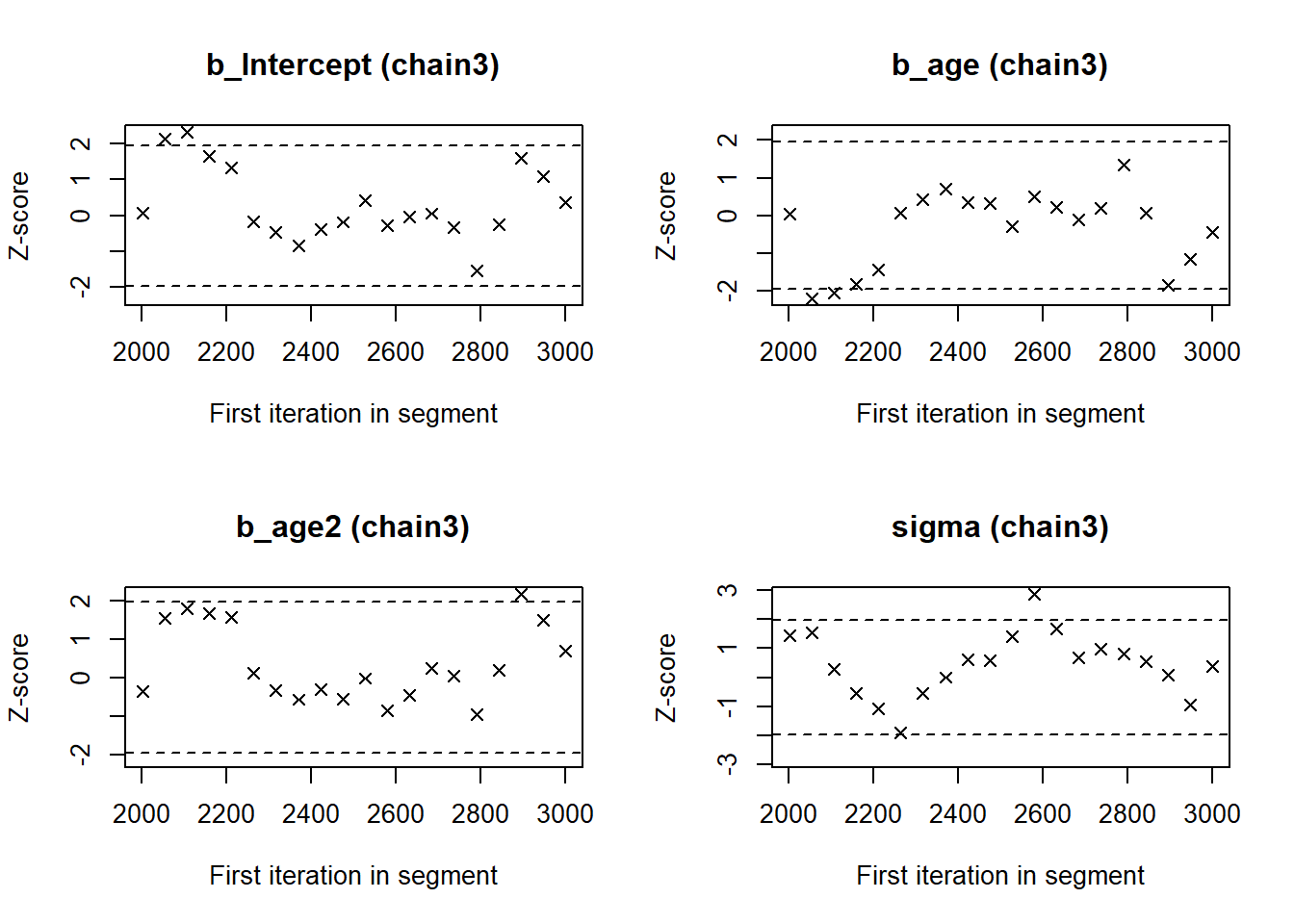

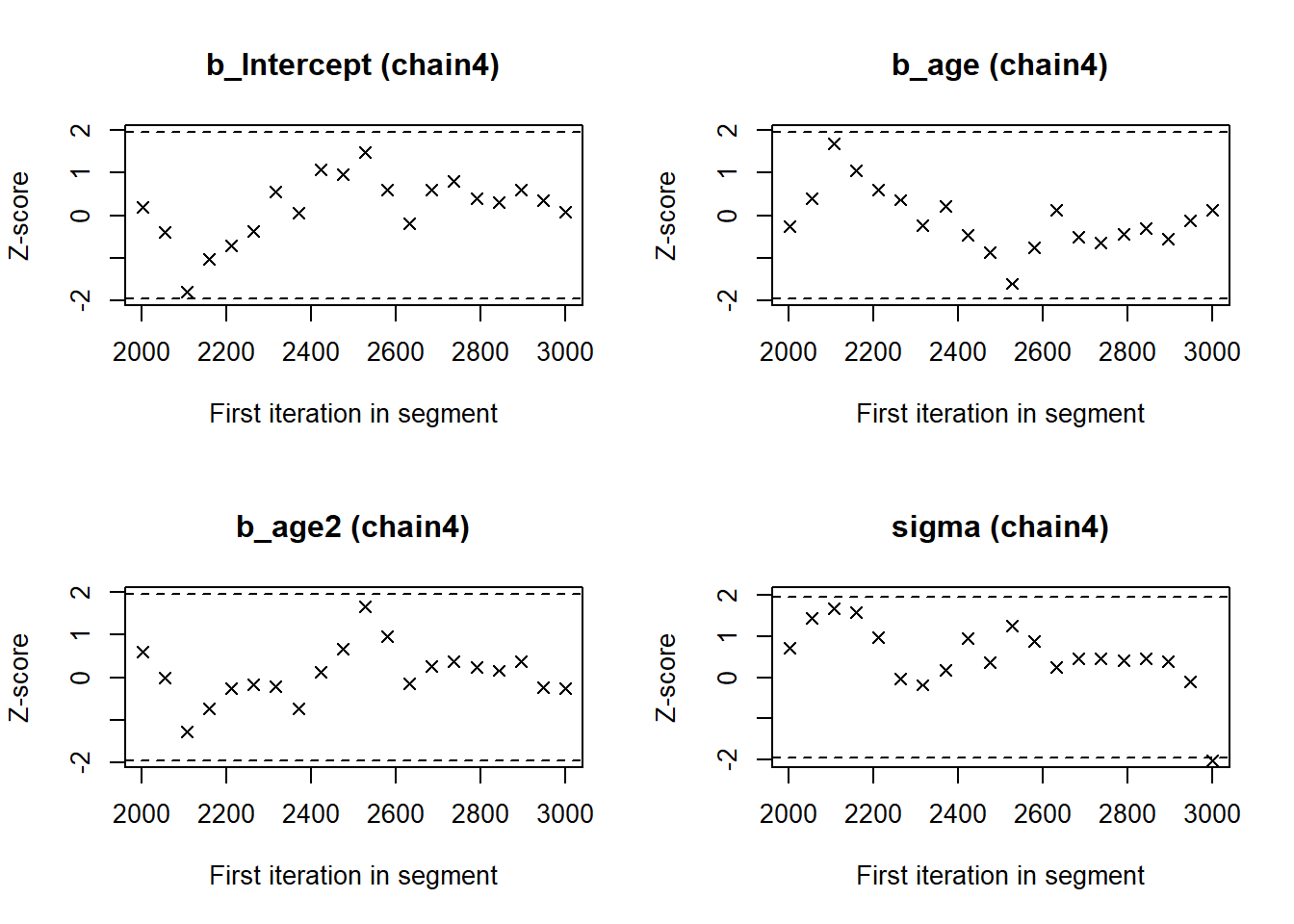

brmsunder the column Rhat - The Geweke Diagnostic shows the z-scores for a test of equality of means between the first and last parts of each chain, which should be <1.96. A separate statistic is calculated for each variable in each chain. In this way it check whether a chain has stabilized. If this is not the case, you should increase the number of iterations. In the plots you should check how often values exceed the boundary lines of the z-scores. Scores above 1.96 or below -1.96 mean that the two portions of the chain significantly differ and full chain convergence was not obtained.

To obtain the Gelman and Rubin diagnostic use:

modelposterior <- as.mcmc(model_few_samples) # with the as.mcmc() command we can use all the CODA package convergence statistics and plotting options

gelman.diag(modelposterior[, 1:4])Potential scale reduction factors:

Point est. Upper C.I.

b_Intercept 3.06 5.34

b_age 2.95 5.12

b_age2 2.42 4.00

sigma 1.02 1.07

Multivariate psrf

2.58gelman.plot(modelposterior[, 1:4])

To obtain the Geweke diagnostic use:

geweke.diag(modelposterior[, 1:4])[[1]]

Fraction in 1st window = 0.1

Fraction in 2nd window = 0.5

b_Intercept b_age b_age2 sigma

5.648 -6.064 5.868 2.308

[[2]]

Fraction in 1st window = 0.1

Fraction in 2nd window = 0.5

b_Intercept b_age b_age2 sigma

0.5428 -0.6873 1.1920 -2.0887

[[3]]

Fraction in 1st window = 0.1

Fraction in 2nd window = 0.5

b_Intercept b_age b_age2 sigma

1.5111 -1.4408 2.0360 -0.9567

[[4]]

Fraction in 1st window = 0.1

Fraction in 2nd window = 0.5

b_Intercept b_age b_age2 sigma

-1.72162 1.76916 -2.49830 0.05041 geweke.plot(modelposterior[, 1:4])

These statistics confirm that the chains have not converged. Therefore, we run the same analysis with more samples (and more burnin samples).

model <- brm(formula = diff ~ 0 + Intercept + age + age2,

data = dataPHD,

prior = priors_inf,

warmup = 2000,

iter = 4000,

seed = 12345)Obtain the trace plots again.

mcmc_plot(model, type = "trace")No divergences to plot.

Obtain the Gelman and Rubin diagnostic again.

modelposterior <- as.mcmc(model) # with the as.mcmc() command we can use all the CODA package convergence statistics and plotting options

gelman.diag(modelposterior[, 1:4])Potential scale reduction factors:

Point est. Upper C.I.

b_Intercept 1 1.00

b_age 1 1.00

b_age2 1 1.00

sigma 1 1.01

Multivariate psrf

1gelman.plot(modelposterior[, 1:4])

Obtain Geweke diagnostic again.

geweke.diag(modelposterior[, 1:4])

geweke.plot(modelposterior[, 1:4])

Now we see that the Gelman and Rubin diagnostic (PRSF) is close to 1 for all parameters and the Geweke diagnostic is not > 1.96.

3. Does convergence remain after doubling the number of iterations?

As is recommended in the WAMBS checklist, we double the amount of iterations to check for local convergence.

model_doubleiter <- brm(formula = diff ~ 0 + Intercept + age + age2,

data = dataPHD,

prior = priors_inf,

warmup = 4000,

iter = 8000,

seed = 12345)You should again have a look at the above-mentioned convergence statistics, but we can also compute the relative bias to inspect if doubling the number of iterations influences the posterior parameter estimates (\(bias= 100*\frac{(model \; with \; double \; iteration \; - \; initial \; converged \; model )}{initial \; converged \; model}\)).

You should evaluate the relative bias in combination with substantive knowledge about the metric of the parameter of interest to determine when levels of relative deviation are negligible or problematic. For example, with a regression coefficient of 0.001, a 5% relative deviation level might not be substantively relevant. However, with an intercept parameter of 50, a 10% relative deviation level might be quite meaningful. The specific level of relative deviation should be interpreted in the substantive context of the model. Some examples of interpretations are:

- if relative deviation is < |5|%, then do not worry;

- if relative deviation > |5|%, then rerun with 4x nr of iterations.

Question: calculate the relative bias. Are you satisfied with number of iterations, or do you want to re-run the model with even more iterations?

To get the relative bias simply extract the means of the regression coefficients and other parameters (ignore lp__ for now) using posterior_summary for the two different analyses and compute the bias.

Click to show result

round(100*((posterior_summary(model_doubleiter)[,"Estimate"] - posterior_summary(model)[,"Estimate"]) / posterior_summary(model)[,"Estimate"]), 4)b_Intercept b_age b_age2 sigma lprior lp__

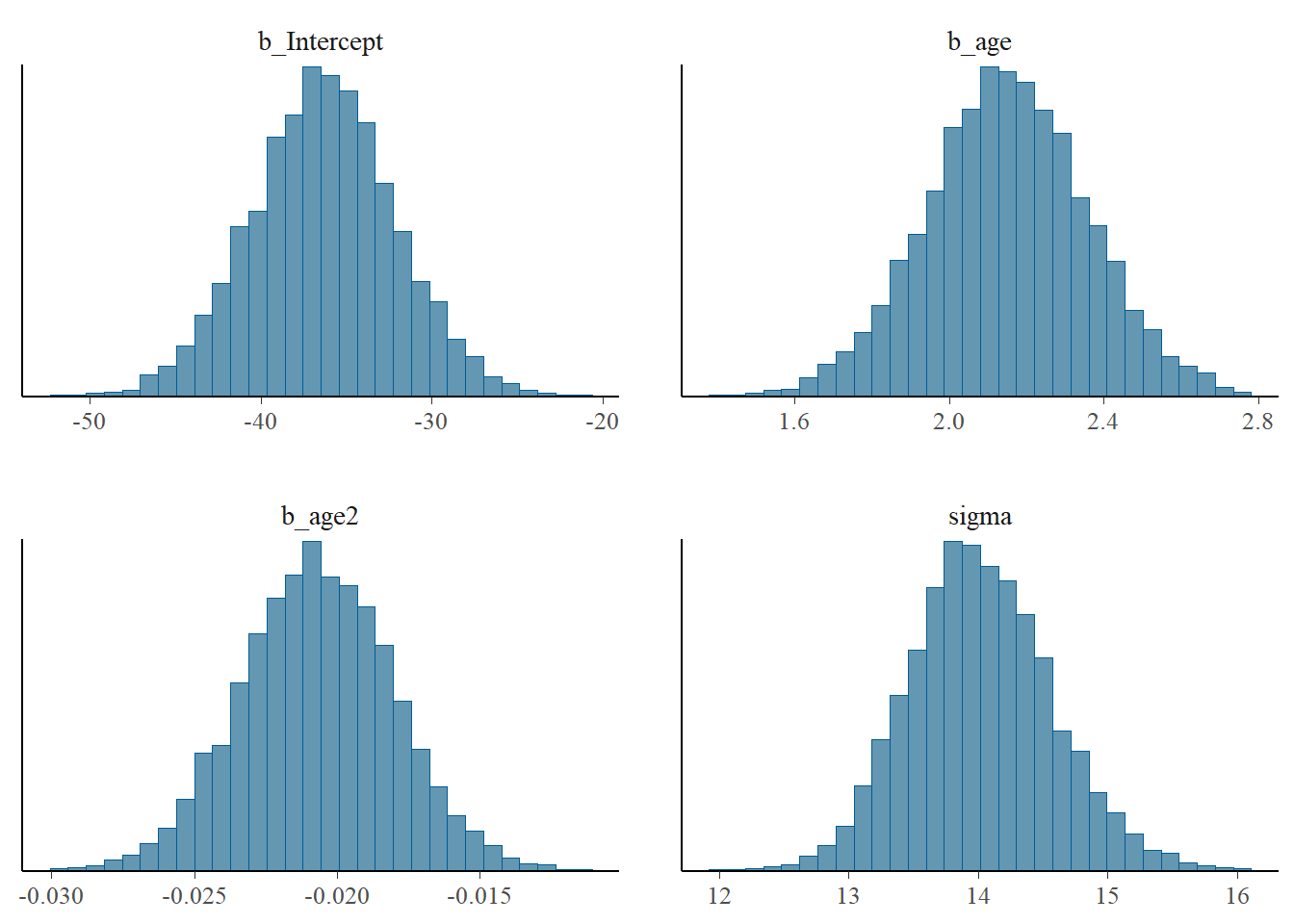

0.0075 -0.0133 -0.0959 -0.0823 -0.0683 0.0001 4. Does the posterior distribution histogram have enough information?

By having a look at the posterior distribution histogram mcmc_plot(model, type = "hist"), we can check if it has enough information.

Question: What can you conclude about distribution histograms?

mcmc_plot(model, type = "hist")`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

Click to show interpretation

The histograms look smooth and have no gaps or other abnormalities. Based on this, adding more iterations is not necessary. However, if you are not satisfied, you can increase the number of iterations again. Posterior distributions do not have to be symmetrical, but in this example they seem to be.If we compare this with histograms based on the first analysis (with very few iterations), this difference becomes clear:

mcmc_plot(model_few_samples, type = "hist")`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

5. Is the effective sample size sufficient or do the chains exhibit a strong degree of autocorrelation?

The effective sample size (ESS) is a measure of the number of independent samples in a Markov Chain Monte Carlo (MCMC) chain and reflects the efficiency of the algorithm. It accounts for the autocorrelation in the chains, which can reduce the effective number of samples. A higher ESS indicates that the MCMC chain is more efficient and provides more reliable estimates. brms / Stan gives a warning if the number of ESS is too small.

# number of effective samples

effectiveSize(model)b_Intercept b_age b_age2 sigma lprior lp__

2069.628 1976.339 2324.616 3403.014 2364.802 3102.669 # ratio of effective samples / number of iterations

neff_ratio(model)b_Intercept b_age b_age2 sigma lprior lp__

0.2700703 0.2544073 0.2918451 0.4471365 0.3279454 0.3748168 To obtain information about autocorrelation the following syntax can be used:

mcmc_plot(model, type = "acf")Warning: The `facets` argument of `facet_grid()` is deprecated as of ggplot2 2.2.0.

ℹ Please use the `rows` argument instead.

ℹ The deprecated feature was likely used in the bayesplot package.

Please report the issue at <https://github.com/stan-dev/bayesplot/issues/>.

Question: What can you conclude about these autocorrelation plots?

Click to show interpretation

These results show that autocorrelation is quite strong after a few lags (up to 10). This means it is important to make sure we ran the analysis with a lot of samples, because with a high autocorrelation it will take longer until the whole parameter space has been identified. Historically, thinning has been used to reduce autocorrelation in MCMC chains, but this is no longer recommended. Instead, it is better to increase the number of iterations and warmup samples to ensure that the chains are well-mixed and that the effective sample size is sufficient. For more information on autocorrelation check this paper.6. Do the posterior distributions and posterior predictions make substantive sense?

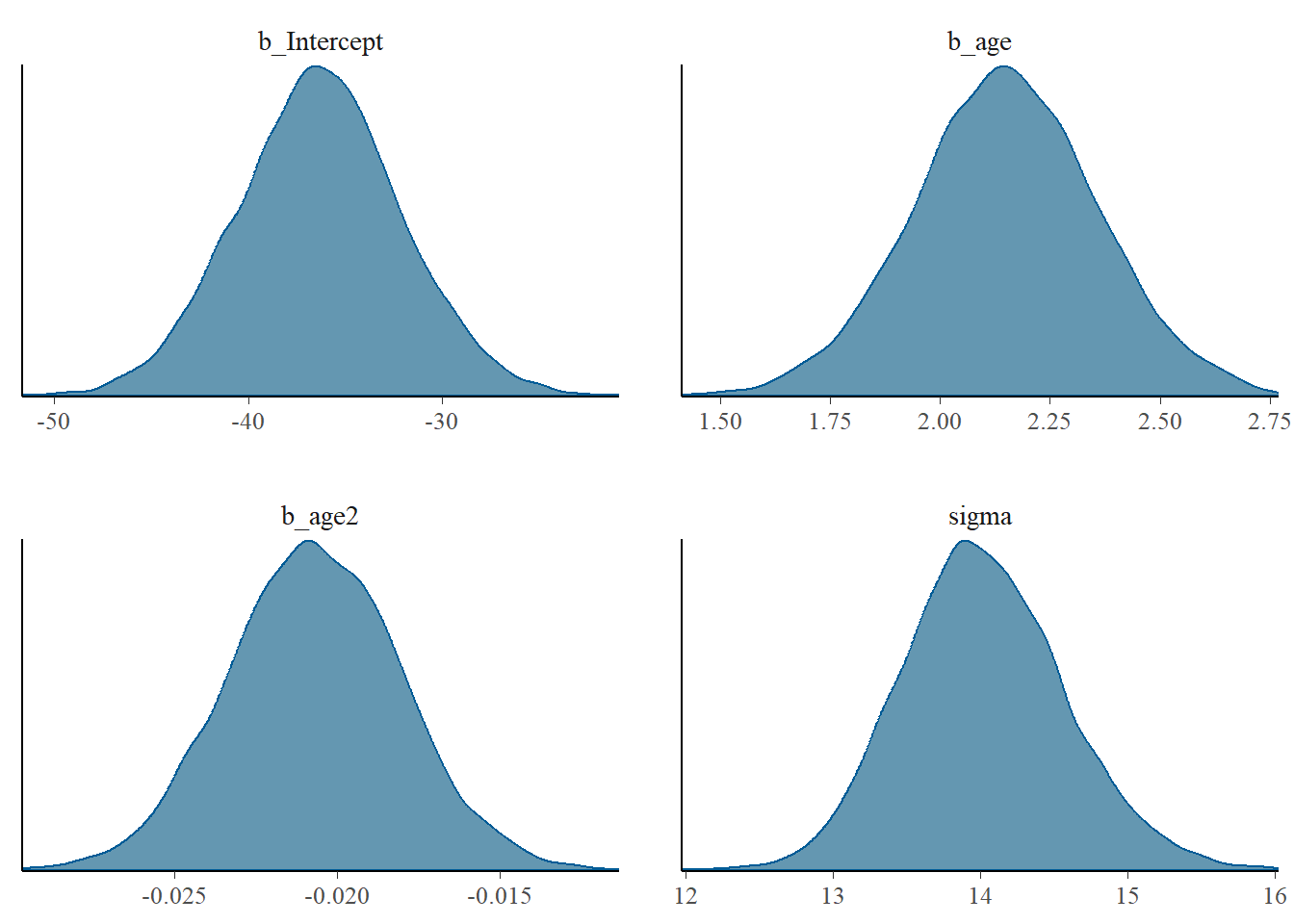

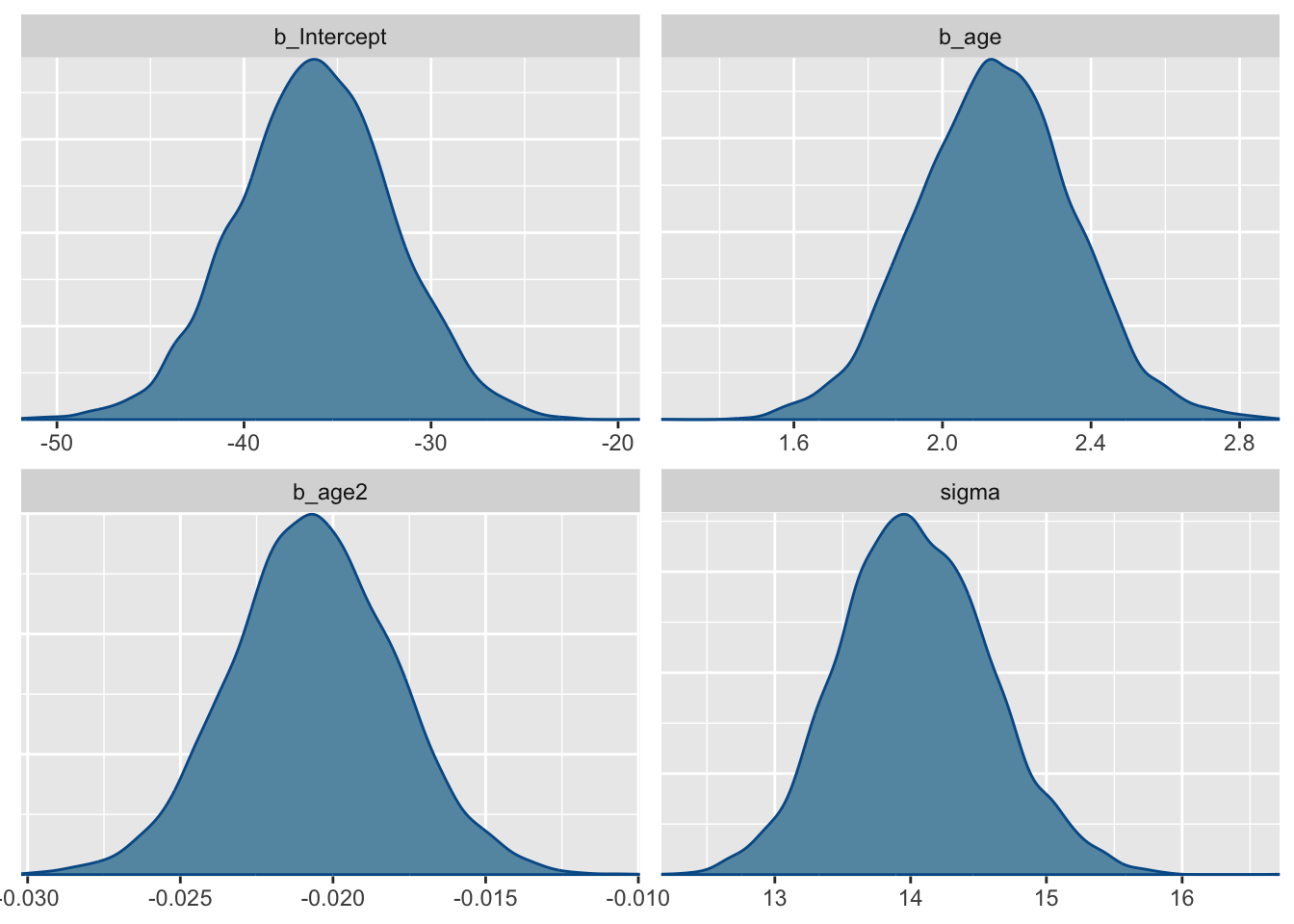

We plot the posterior distributions and see if they are unimodel (one peak), if they are clearly centered around one value, if they give a realistic estimate and if they make substantive sense compared to our prior believes (priors). Here we plot the posteriors of the regression coefficients. If you want you can also plot the mean and the 95% Posterior HPD Intervals.

mcmc_plot(model, type = "dens")

Question: What is your conclusion; do the posterior distributions make sense?

Click to show interpretation

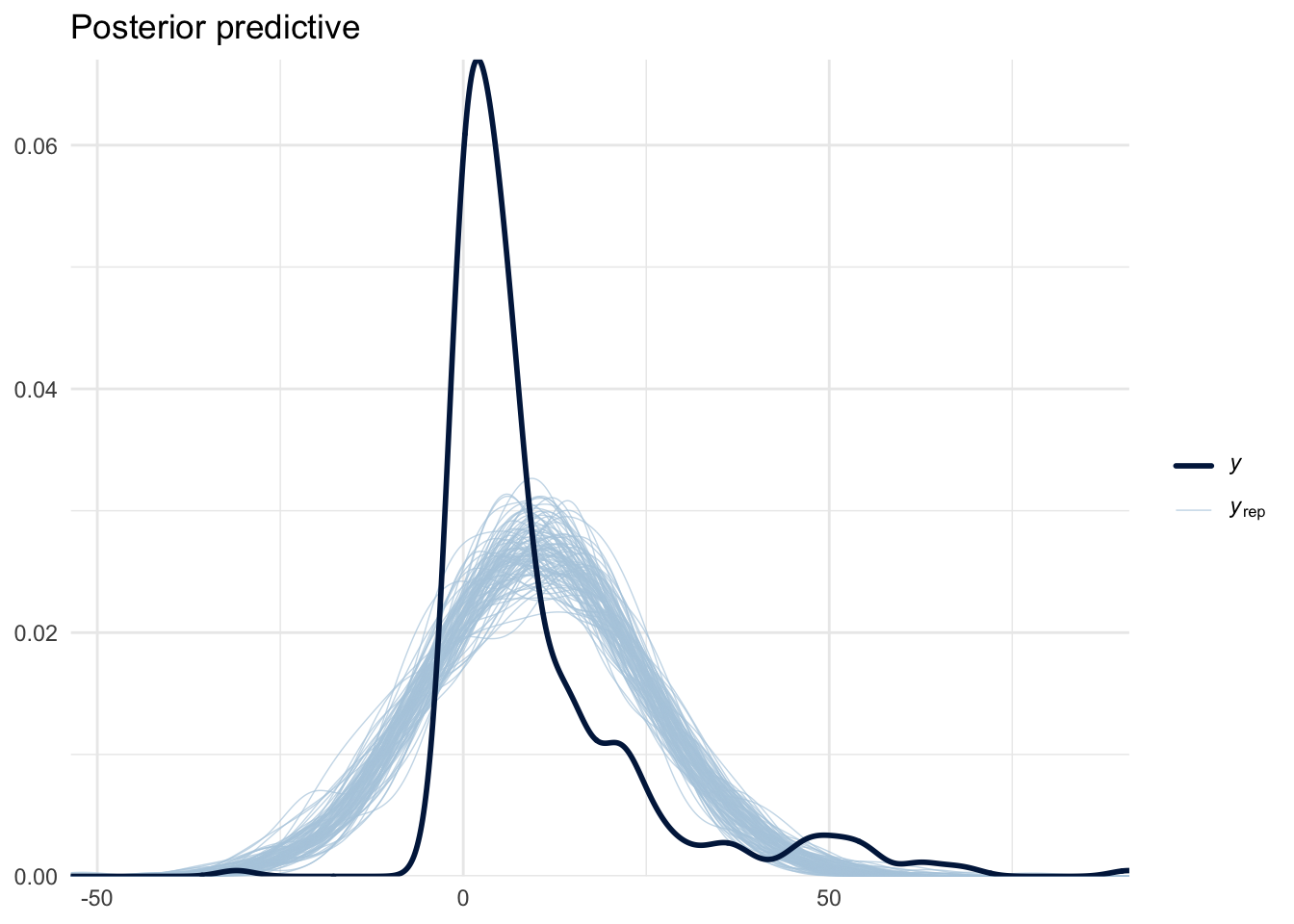

Yes, we see a clear negative intercept, which makes sense since a value of age = 0 for Ph.D is impossible. We also have have plausible ranges of values for the regression coefficients and a positive variance.In addition, do the posterior predictive distributions make sense substantively and in light of the observed data? For this, we want to look at predictions on the response scale the model makes based on the posterior parameter estimates.

bayesplot::pp_check(model, ndraws = 100) + theme_minimal() + ggtitle("Posterior predictive")

Question: Do the posterior predictive distributions make sense substantively and in light of the observed data?

Click to show interpretation

This is often the tricky part. Clearly, we see that the model does not perfectly capture the shape of the observed data, which is not symmetrical. Although we could argue that the fit is good enough for our purposes, we could also investigate how to improve the fit by considering alternative likelihood functions, such as a shifted lognormal distribution, or setting a lower bound based on the minimum possible delay (e.g., 2 years, so 12 months). Do note that while these changes will most likely improve the posterior fit, because they better suit the data-generating process, they will also make interpretation more complex.Step 3: Understanding the exact influence of the priors

First we check the results of the analysis with the priors we used so far.

summary(model) Family: gaussian

Links: mu = identity; sigma = identity

Formula: diff ~ 0 + Intercept + age + age2

Data: dataPHD (Number of observations: 333)

Draws: 4 chains, each with iter = 4000; warmup = 2000; thin = 1;

total post-warmup draws = 8000

Regression Coefficients:

Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

Intercept -36.20 4.20 -44.32 -28.03 1.00 2161 2729

age 2.14 0.21 1.73 2.56 1.00 2035 2645

age2 -0.02 0.00 -0.03 -0.02 1.00 2335 3072

Further Distributional Parameters:

Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

sigma 14.04 0.55 12.99 15.17 1.00 3577 3741

Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

and Tail_ESS are effective sample size measures, and Rhat is the potential

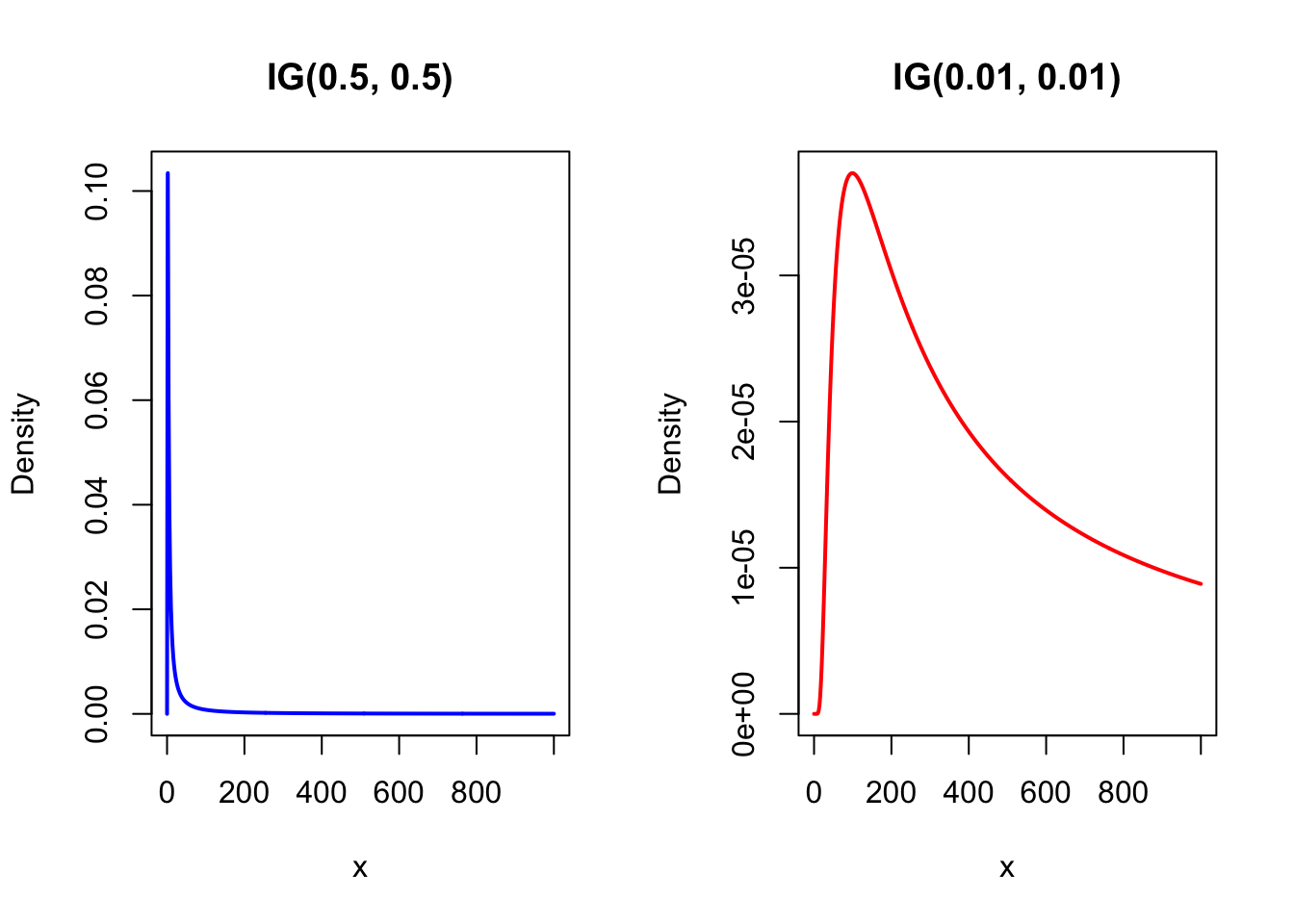

scale reduction factor on split chains (at convergence, Rhat = 1).7. Do different specification of the variance priors influence the results?

So far we have used the -\(\in \sim IG(.5, .5)\) prior, but we can also use the -\(\in \sim IG(.01, .01)\) prior and see if doing so makes a difference. To quantify this difference we again calculate a relative bias.

Let’s first plot the priors to get an idea of what expectation of the variance they reflect.

# inverse gamma prior

# Create a sequence of x values

x <- seq(0.01, 1000, length.out = 500)

# Compute the density

y1 <- dinvgamma(x, shape = 0.5, scale = 0.5)

y2 <- dinvgamma(x, shape = 0.01, scale = 0.01)

# Plot the Inverse Gamma prior

par(mfrow = c(1,2))

plot(x, y1, type = "l", lwd = 2, col = "blue",

main = "IG(0.5, 0.5)",

xlab = "x", ylab = "Density")

plot(x, y2, type = "l", lwd = 2, col = "red",

main = "IG(0.01, 0.01)",

xlab = "x", ylab = "Density")

Question: Are the results robust for different specifications of the prior on the residual variance?

| Parameters | Estimate with \(\in \sim IG(.01, .01)\) | Estimate with \(\in \sim IG(.5, .5)\) | Bias |

|---|---|---|---|

| Intercept | |||

| Age | |||

| Age2 | |||

| Residual variance |

# 1) set the priors

priors_inf2 <- c(set_prior("normal(.8, 2.24)", class = "b", coef = "age"),

set_prior("normal(0, 3.16)", class = "b", coef = "age2"),

set_prior("normal(-35, 4.47)", class = "b", coef= "Intercept"),

set_prior("inv_gamma(.01,.01)", class="sigma"))

# 2) specify the model

model.difIG <- brm(formula = diff ~ 0 + Intercept + age + age2,

data = dataPHD,

prior = priors_inf2,

warmup = 2000,

iter = 4000,

seed = 12345)posterior_summary(model.difIG) Estimate Est.Error Q2.5 Q97.5

b_Intercept -36.1755709 4.209273730 -4.448700e+01 -27.9577409

b_age 2.1409849 0.209197245 1.739788e+00 2.5633501

b_age2 -0.0206032 0.002673978 -2.598165e-02 -0.0154425

sigma 14.0467961 0.551600771 1.302136e+01 15.1759260

lprior -14.1870134 0.699843400 -1.621224e+01 -13.6473609

lp__ -1363.6609612 1.420586992 -1.367189e+03 -1361.8808379Click to show result

| Parameters | Estimate with \(\in \sim IG(.01, .01)\) | Estimate with \(\in \sim IG(.5, .5)\) | Bias |

|---|---|---|---|

| Intercept | -36.176 | -36.201 | \(100\cdot \frac{-36.176--36.201 }{-36.201} = -0.07\%\) |

| Age | 2.141 | 2.143 | \(100\cdot \frac{2.141-2.143 }{2.143} = -0.09\%\) |

| Age2 | -0.021 | -0.021 | \(100\cdot \frac{-0.021--0.021 }{-0.021} = -0.19\%\) |

| Residual variance | 14.047 | 14.038 | \(100\cdot \frac{14.047-14.038 }{14.038} = 0.07\%\) |

Extra question: Which of the two settings for the inverse gamma prior on the residual variance do you think is more appropriate here?

8. Is there a notable effect of the prior when compared with non-informative priors?

The default brms priors are non-informative, so we can run the analysis without any specified priors and compare them to the model we have run so far, using the relative bias, to see if there is a large influence of the priors.

Question: What is your conclusion about the influence of the priors on the posterior results?

| Parameters | Estimates with default priors | Estimate with informative priors | Bias |

|---|---|---|---|

| Intercept | |||

| Age | |||

| Age2 | |||

| Residual variance |

From the brms manual we learn that:

- The default prior for population-level effects (including monotonic and category specific effects) is an improper flat prior over the reals.”

- “By default, sigma has a half student-t prior that scales in the same way as the group-level standard deviations.”

We can run the model without our priors and check if doing so strongly influences the results.

model.default <- brm(formula = diff ~ 0 + Intercept + age + age2,

data = dataPHD,

warmup = 1000,

iter = 2000,

seed = 123)

posterior_summary(model.default)You can always check the priors in a given model using the function get_prior().

get_prior(model.default) prior class coef group resp dpar nlpar lb ub source

(flat) b default

(flat) b age (vectorized)

(flat) b age2 (vectorized)

(flat) b Intercept (vectorized)

student_t(3, 0, 7.4) sigma 0 defaultHere we see that the default prior for sigma –based on these data– is a half student-t prior with scale 7.4.

Click to show result

| Parameters | Estimates with default priors | Estimate with informative priors | Bias |

|---|---|---|---|

| Intercept | -47.58 | -36.2 | \(100\cdot \frac{-47.579--36.201 }{-36.201} = 31.43\%\) |

| Age | 2.68 | 2.143 | \(100\cdot \frac{2.68-2.143 }{2.143} = 25.09\%\) |

| Age2 | -0.026 | -0.021 | \(100\cdot \frac{-0.026--0.021 }{-0.021} = 26.22\%\) |

| Residual variance | 14.019 | 14.038 | \(100\cdot \frac{14.019-14.038 }{14.038} = -0.13\%\) |

Question: Which results do you use to draw conclusions?

Click to show interpretation

This really depends on where the priors come from. If for example your informative priors come from a reliable source, you should use them. The most important thing is that you choose your priors accurately, and have good arguments to use them. If not, you shouldn't use really informative priors and use the results based on the non-informative priors.9. Are the results stable from a sensitivity analysis?

If you still have time left, you can adjust the hyperparameters of the priors upward and downward and re-estimating the model with these varied priors to check for robustness.

From the original paper:

“If informative or weakly-informative priors are used, then we suggest running a sensitivity analysis of these priors. When subjective priors are in place, then there might be a discrepancy between results using different subjective prior settings. A sensitivity analysis for priors would entail adjusting the entire prior distribution (i.e., using a completely different prior distribution than before) or adjusting hyperparameters upward and downward and re-estimating the model with these varied priors. Several different hyperparameter specifications can be made in a sensitivity analysis, and results obtained will point toward the impact of small fluctuations in hyperparameter values. […] The purpose of this sensitivity analysis is to assess how much of an impact the location of the mean hyperparameter for the prior has on the posterior. […] Upon receiving results from the sensitivity analysis, assess the impact that fluctuations in the hyperparameter values have on the substantive conclusions. Results may be stable across the sensitivity analysis, or they may be highly instable based on substantive conclusions. Whatever the finding, this information is important to report in the results and discussion sections of a paper. We should also reiterate here that original priors should not be modified, despite the results obtained.”

For more information on this topic, please also refer to this paper.

In addition to sensitivity analyses based on prior settings, you may also include sensitivity checks based on the model structure, such as using a different likelihood function (e.g., the shifted log-normal distribution) or the inclusion of additional predictors or interactions.

10. Is the Bayesian way of interpreting and reporting model results used?

For a summary on how to interpret and report models, please refer to https://www.rensvandeschoot.com/bayesian-analyses-where-to-start-and-what-to-report/

summary(model) Family: gaussian

Links: mu = identity; sigma = identity

Formula: diff ~ 0 + Intercept + age + age2

Data: dataPHD (Number of observations: 333)

Draws: 4 chains, each with iter = 4000; warmup = 2000; thin = 1;

total post-warmup draws = 8000

Regression Coefficients:

Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

Intercept -36.20 4.20 -44.32 -28.03 1.00 2161 2729

age 2.14 0.21 1.73 2.56 1.00 2035 2645

age2 -0.02 0.00 -0.03 -0.02 1.00 2335 3072

Further Distributional Parameters:

Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

sigma 14.04 0.55 12.99 15.17 1.00 3577 3741

Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

and Tail_ESS are effective sample size measures, and Rhat is the potential

scale reduction factor on split chains (at convergence, Rhat = 1).# summary of sampling diagnostics

rhats <- brms::rhat(model)

rhat_max <- sort(rhats, decreasing = T)[1] #sigma

ratios <- neff_ratio(model)

ess <- bayestestR::effective_sample(model, effects = "all")

ess_min <- min(ess$ESS) #b_age Aspects to report:

- estimates + credible intervals: make use of the fact that you have actual indication of uncertainty of the model parameters (not just hypothetical). Also add plots to ease interpretability!

- program and packages used

- discussion of priors (justify choices, and report sensitivity)

- discussion of settings (number of chains, number of interaction, warmup, seeds etc.)

- discussion of sampling diagnostics (e.g., \(\hat{R}\), effective sample size)

- perhaps: model fit or model comparison metrics (e.g., loo, Bayes factors)

Example:

- The estimate for the intercept is -36.2 [-44.32 ; -28.03]

- The estimate for the effect of \(age\) is 2.14 [1.73 ; 2.56]

- The estimate for the effect of \(age^2\) is -0.02 [-0.03 ; -0.02]

We used the brms package (Bürkner, 2017) to fit Bayesian multilevel models, which relies on the Stan language (Carpenter et al., 2017). The model was run with 4 chains, each with 4000 iterations and a warmup of 2000 iterations (total post-warmup \(N=8000\)). The model diagnostics indicated good convergence (largest \(\hat{R}=\) 1.0027 for the residual variance and sufficient effective sample sizes for all parameters (median \(\hat{N}_{\text{eff}}\) = 2161). The smallest \(\hat{N}_{\text{eff}}\) = 2035 for the regression coefficient of age (ratio= 0.25), indicating that there is some autocorrelation in the chains.

We used an linear regression model with a Gaussian likelihood and informative priors derived from (fictitious) previous studies on the intercept \(\sim \mathcal{N}(-35, 20)\), the coefficient of age in years \(\beta_1 \sim \mathcal{N}(.8, 5)\), the coefficient of squared age \(\beta_2 \sim \mathcal{N}(0, 10)\), as we expected a non-linear effect of age, and the residual variance \(\sigma \sim IG(.5, .5)\). This is an uninformative prior for the residual variance, which has been found to perform well in simulation studies. Sensitivity checks on the priors and model specification indicated that the conclusions are robust to different prior settings for the variance (i.e., \(\sigma \sim IG(.01, .01)\). However, result do differ when using diffuse default priors, which results in a lower intercept and stronger effects of age, though the pattern remains the same.

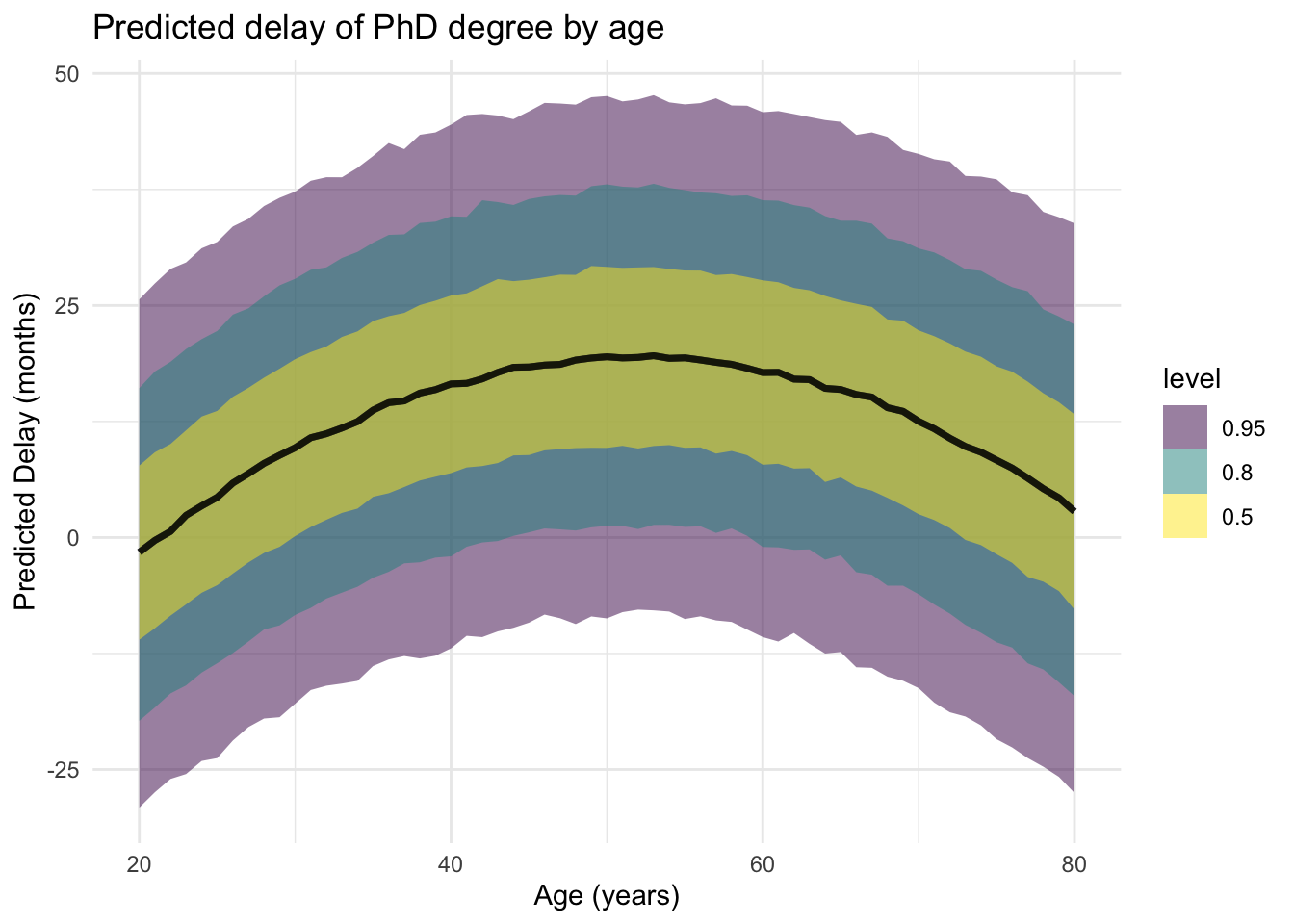

Remember how we plotted the relation between delay and years based on the prior information? Now, do the same with the posterior estimates.

years <- 20:80

delay <- summ1[1,1] + summ1[2,1]*years +(summ1[3,1])*years^2

plot(years, delay, type= "l", xlim= c(20, 80),

xlab = "Age (years)", ylab = "Delay (months)", main = "Estimated delay of PhD degree by age")

# or, more elegantly, using the posterior samples

library(tidybayes)

newdata <- tibble(age = years, age2 = years^2)

model %>%

add_predicted_draws(newdata = newdata) %>%

ggplot(aes(x = age, y = .prediction)) +

stat_lineribbon(alpha = 0.5) +

labs(title = "Predicted delay of PhD degree by age",

y = "Predicted Delay (months)",

x = "Age (years)") +

theme_minimal()

References

Depaoli, S., & Van de Schoot, R. (2017). Improving transparency and replication in Bayesian statistics: The WAMBS-Checklist. Psychological Methods, 22(2), 240.

Link, W. A., & Eaton, M. J. (2012). On thinning of chains in MCMC. Methods in ecology and evolution, 3(1), 112-115.

van Erp, S., Mulder, J., & Oberski, D. L. (2017). Prior sensitivity analysis in default Bayesian structural equation modeling.

Van de Schoot, R., & Depaoli, S. (2014). Bayesian analyses: Where to start and what to report. European Health Psychologist, 16(2), 75-84.

Bürkner, P-C. (2017). Brms: An R Package for Bayesian Multilevel Models Using Stan. Journal of Statistical Software 80(1), 1–28. https://doi.org/10.18637/jss.v080.i01.

Carpenter, B., Gelman, A., Hoffman, M., Lee, D., Goodrich, B., Betancourt, M., Brubaker, M. A., Guo, J., Li, P., & Riddell, A. (2017). Stan: A probabilistic programming language. Journal of Statistical Software, 76. https://doi.org/10.18637/jss.v076.i01

Original Computing Environment

devtools::session_info()─ Session info ───────────────────────────────────────────────────────────────

setting value

version R version 4.3.3 (2024-02-29)

os macOS 15.5

system aarch64, darwin20

ui X11

language (EN)

collate en_US.UTF-8

ctype en_US.UTF-8

tz Europe/Amsterdam

date 2025-07-20

pandoc 3.1.1 @ /Applications/RStudio.app/Contents/Resources/app/quarto/bin/tools/ (via rmarkdown)

─ Packages ───────────────────────────────────────────────────────────────────

package * version date (UTC) lib source

abind 1.4-8 2024-09-12 [1] CRAN (R 4.3.3)

arrayhelpers 1.1-0 2020-02-04 [2] CRAN (R 4.3.0)

backports 1.5.0 2024-05-23 [1] CRAN (R 4.3.3)

bayesplot * 1.10.0 2022-11-16 [2] CRAN (R 4.3.0)

bayestestR 0.16.0 2025-05-20 [1] CRAN (R 4.3.3)

bridgesampling 1.1-2 2021-04-16 [2] CRAN (R 4.3.0)

brms * 2.22.0 2024-09-23 [1] CRAN (R 4.3.3)

Brobdingnag 1.2-9 2022-10-19 [2] CRAN (R 4.3.0)

cachem 1.1.0 2024-05-16 [1] CRAN (R 4.3.3)

callr 3.7.6 2024-03-25 [1] CRAN (R 4.3.1)

checkmate 2.3.2 2024-07-29 [1] CRAN (R 4.3.3)

cli 3.6.5 2025-04-23 [1] CRAN (R 4.3.3)

coda * 0.19-4.1 2024-01-31 [1] CRAN (R 4.3.1)

codetools 0.2-19 2023-02-01 [2] CRAN (R 4.3.3)

colorspace 2.1-1 2024-07-26 [1] CRAN (R 4.3.3)

curl 6.2.2 2025-03-24 [1] CRAN (R 4.3.3)

denstrip 1.5.5 2025-03-25 [1] CRAN (R 4.3.3)

devtools 2.4.5 2022-10-11 [2] CRAN (R 4.3.0)

digest 0.6.37 2024-08-19 [1] CRAN (R 4.3.3)

distributional 0.5.0 2024-09-17 [1] CRAN (R 4.3.3)

dplyr * 1.1.4 2023-11-17 [1] CRAN (R 4.3.1)

ellipsis 0.3.2 2021-04-29 [2] CRAN (R 4.3.0)

emmeans 1.11.0 2025-03-20 [1] CRAN (R 4.3.3)

estimability 1.5.1 2024-05-12 [1] CRAN (R 4.3.3)

evaluate 1.0.3 2025-01-10 [1] CRAN (R 4.3.3)

farver 2.1.2 2024-05-13 [1] CRAN (R 4.3.3)

fastmap 1.2.0 2024-05-15 [1] CRAN (R 4.3.3)

forcats * 1.0.0 2023-01-29 [2] CRAN (R 4.3.0)

fs 1.6.6 2025-04-12 [1] CRAN (R 4.3.3)

generics 0.1.4 2025-05-09 [1] CRAN (R 4.3.3)

GGally 2.2.1 2024-02-14 [1] CRAN (R 4.3.1)

ggdist 3.3.0 2023-05-13 [2] CRAN (R 4.3.0)

ggmcmc * 1.5.1.1 2021-02-10 [1] CRAN (R 4.3.0)

ggplot2 * 3.5.2 2025-04-09 [1] CRAN (R 4.3.3)

ggstats 0.7.0 2024-09-22 [1] CRAN (R 4.3.3)

glue 1.8.0 2024-09-30 [1] CRAN (R 4.3.3)

gridExtra 2.3 2017-09-09 [2] CRAN (R 4.3.0)

gtable 0.3.6 2024-10-25 [1] CRAN (R 4.3.3)

hms 1.1.3 2023-03-21 [2] CRAN (R 4.3.0)

htmltools 0.5.8.1 2024-04-04 [1] CRAN (R 4.3.1)

htmlwidgets 1.6.4 2023-12-06 [1] CRAN (R 4.3.1)

httpuv 1.6.15 2024-03-26 [1] CRAN (R 4.3.1)

inline 0.3.19 2021-05-31 [2] CRAN (R 4.3.0)

insight 1.3.0 2025-05-20 [1] CRAN (R 4.3.3)

invgamma * 1.1 2017-05-07 [2] CRAN (R 4.3.0)

jsonlite 2.0.0 2025-03-27 [1] CRAN (R 4.3.3)

knitr 1.49 2024-11-08 [1] CRAN (R 4.3.3)

labeling 0.4.3 2023-08-29 [1] CRAN (R 4.3.0)

later 1.4.1 2024-11-27 [1] CRAN (R 4.3.3)

lattice 0.22-5 2023-10-24 [2] CRAN (R 4.3.3)

lifecycle 1.0.4 2023-11-07 [1] CRAN (R 4.3.1)

loo 2.8.0 2024-07-03 [1] CRAN (R 4.3.3)

lubridate * 1.9.2 2023-02-10 [2] CRAN (R 4.3.0)

magrittr 2.0.3 2022-03-30 [2] CRAN (R 4.3.0)

Matrix 1.6-5 2024-01-11 [2] CRAN (R 4.3.3)

matrixStats 1.5.0 2025-01-07 [1] CRAN (R 4.3.3)

mcmcplots * 0.4.3 2018-06-22 [1] CRAN (R 4.3.3)

memoise 2.0.1 2021-11-26 [2] CRAN (R 4.3.0)

mime 0.13 2025-03-17 [1] CRAN (R 4.3.3)

miniUI 0.1.1.1 2018-05-18 [2] CRAN (R 4.3.0)

mnormt 2.1.1 2022-09-26 [2] CRAN (R 4.3.0)

mvtnorm 1.3-3 2025-01-10 [1] CRAN (R 4.3.3)

nlme 3.1-164 2023-11-27 [2] CRAN (R 4.3.3)

pillar 1.11.0 2025-07-04 [1] CRAN (R 4.3.3)

pkgbuild 1.4.7 2025-03-24 [1] CRAN (R 4.3.3)

pkgconfig 2.0.3 2019-09-22 [2] CRAN (R 4.3.0)

pkgload 1.4.0 2024-06-28 [1] CRAN (R 4.3.3)

plyr 1.8.9 2023-10-02 [1] CRAN (R 4.3.1)

posterior 1.6.1 2025-02-27 [1] CRAN (R 4.3.3)

processx 3.8.6 2025-02-21 [1] CRAN (R 4.3.3)

profvis 0.3.8 2023-05-02 [2] CRAN (R 4.3.0)

promises 1.3.2 2024-11-28 [1] CRAN (R 4.3.3)

ps 1.9.1 2025-04-12 [1] CRAN (R 4.3.3)

psych * 2.4.12 2024-12-23 [1] CRAN (R 4.3.3)

purrr * 1.0.4 2025-02-05 [1] CRAN (R 4.3.3)

QuickJSR 1.3.1 2024-07-14 [2] CRAN (R 4.3.3)

R6 2.6.1 2025-02-15 [1] CRAN (R 4.3.3)

RColorBrewer 1.1-3 2022-04-03 [2] CRAN (R 4.3.0)

Rcpp * 1.0.14 2025-01-12 [1] CRAN (R 4.3.3)

RcppParallel 5.1.7 2023-02-27 [2] CRAN (R 4.3.0)

readr * 2.1.4 2023-02-10 [2] CRAN (R 4.3.0)

remotes 2.5.0 2024-03-17 [1] CRAN (R 4.3.1)

reshape2 1.4.4 2020-04-09 [2] CRAN (R 4.3.0)

rlang 1.1.6 2025-04-11 [1] CRAN (R 4.3.3)

rmarkdown 2.29 2024-11-04 [1] CRAN (R 4.3.3)

rstan * 2.32.6 2024-03-05 [2] CRAN (R 4.3.1)

rstantools 2.3.1.1 2023-07-18 [2] CRAN (R 4.3.0)

rstudioapi 0.17.1 2024-10-22 [1] CRAN (R 4.3.3)

scales 1.4.0 2025-04-24 [1] CRAN (R 4.3.3)

sessioninfo 1.2.2 2021-12-06 [2] CRAN (R 4.3.0)

sfsmisc 1.1-16 2023-08-10 [2] CRAN (R 4.3.0)

shiny 1.9.1 2024-08-01 [1] CRAN (R 4.3.3)

StanHeaders * 2.32.10 2024-07-15 [1] CRAN (R 4.3.3)

stringi 1.8.7 2025-03-27 [1] CRAN (R 4.3.3)

stringr * 1.5.1 2023-11-14 [1] CRAN (R 4.3.1)

svUnit 1.0.6 2021-04-19 [2] CRAN (R 4.3.0)

tensorA 0.36.2.1 2023-12-13 [1] CRAN (R 4.3.1)

tibble * 3.3.0 2025-06-08 [1] CRAN (R 4.3.3)

tidybayes * 3.0.6 2023-08-12 [2] CRAN (R 4.3.0)

tidyr * 1.3.1 2024-01-24 [1] CRAN (R 4.3.1)

tidyselect 1.2.1 2024-03-11 [1] CRAN (R 4.3.1)

tidyverse * 2.0.0 2023-02-22 [1] CRAN (R 4.3.0)

timechange 0.2.0 2023-01-11 [2] CRAN (R 4.3.0)

tzdb 0.4.0 2023-05-12 [2] CRAN (R 4.3.0)

urlchecker 1.0.1 2021-11-30 [2] CRAN (R 4.3.0)

usethis 2.2.2 2023-07-06 [2] CRAN (R 4.3.0)

V8 6.0.0 2024-10-12 [1] CRAN (R 4.3.3)

vctrs 0.6.5 2023-12-01 [1] CRAN (R 4.3.1)

viridisLite 0.4.2 2023-05-02 [2] CRAN (R 4.3.0)

withr 3.0.2 2024-10-28 [1] CRAN (R 4.3.3)

xfun 0.49 2024-10-31 [1] CRAN (R 4.3.3)

xtable 1.8-4 2019-04-21 [2] CRAN (R 4.3.0)

yaml 2.3.10 2024-07-26 [1] CRAN (R 4.3.3)

[1] /Users/3659690/Library/R/arm64/4.3/library

[2] /Library/Frameworks/R.framework/Versions/4.3-arm64/Resources/library

──────────────────────────────────────────────────────────────────────────────