Animal Sounds

Detecting primate vocalizations in a jungle of audio recordings

Background

Biodiversity monitoring in tropical rainforests in relation to wood logging certification

Bioacoustic monitoring:

- is a non-invasive method to monitor biodiversity

- dense canopy makes visual monitoring difficult

Background

Applied Data Science seed grant for interdisciplinary collaboration

Team:

- Researcher biology

- 2 biology students

- Researcher in human speech recognition

- Research Engineers (3x)

Challenges and objectives

- Data processing requires automation

- Machine learning requires labeled data

- Low vocalization density

- Training data is not available

- Noisy environment

Challenges and objectives

- Can we use data from a zoo to train a model, and detect vocalizations in the wild?

- If so, create a pipeline that automates the process so it can be reused in other projects

Training data

| Species | # vocalizations | Example |
|---|---|---|
| Chimpanzee | 1190 | |
| Guenon | 554 | |
| Mandrill | 2717 | |
| Red Capped Mangabey | 584 | |

Creating synthetic data

“We need more”

Combine vocalizations with jungle noise

Dampen the vocalizations to simulate distance

- 0 dB

- -3.3 dB

- -6.6 dB

- -10 dB

For each segment, 4 new segments are created
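A minimal sketch of this augmentation step, assuming the dB values are amplitude attenuations (gain = 10^(dB/20)) and that the vocalization and the jungle noise share one sample rate. The names `make_synthetic` and `db_to_gain`, and the stand-in signals, are illustrative and not taken from the project code.

```python
import numpy as np

# Attenuation levels listed on the slide, in dB
ATTENUATIONS_DB = (0.0, -3.3, -6.6, -10.0)

def db_to_gain(db):
    """Convert a level change in dB to a linear amplitude factor (assumes amplitude dB)."""
    return 10.0 ** (db / 20.0)

def make_synthetic(vocalization, noise, attenuations_db=ATTENUATIONS_DB):
    """Return one noisy mix per attenuation level.

    Both inputs are 1-D float arrays at the same sample rate; the noise
    clip must be at least as long as the vocalization.
    """
    if len(noise) < len(vocalization):
        raise ValueError("noise clip is shorter than the vocalization")
    background = noise[: len(vocalization)]
    return [background + db_to_gain(db) * vocalization for db in attenuations_db]

# Stand-in signals: a 440 Hz tone and white noise, not real recordings
rng = np.random.default_rng(0)
sr = 16000
t = np.arange(sr) / sr
call = 0.5 * np.sin(2 * np.pi * 440 * t)
jungle = 0.1 * rng.standard_normal(2 * sr)

mixes = make_synthetic(call, jungle)  # 4 new segments for 1 input segment
```

Each input segment yields one mix per attenuation level, which matches the "4 new segments" count above.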

Training and testing classifiers

How to test the classifiers?

- cross-validation

- need for an independent test set

- super low density of vocalizations in the wild

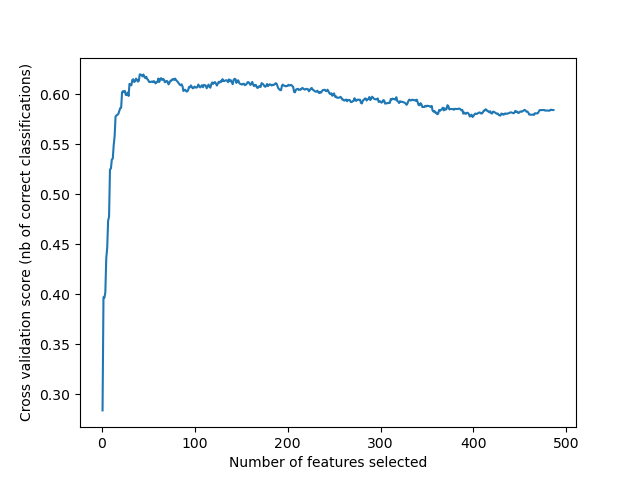

Classical machine learning

Feature extraction inspired by human speech recognition

Scikit-learn: Feature selection

Determining number of features to select with RFE

Scikit-learn: SVM

- Use the feature_importances_ attribute of ExtraTreesClassifier to select the 50 most important features

- Train an SVM model on the selected features
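The two steps above can be sketched with scikit-learn as follows. This is an illustration under assumptions, not the project pipeline: the data comes from `make_classification` as a stand-in for real acoustic features, and the tree count, kernel, and scaling step are arbitrary choices.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Stand-in data: 200 features per segment, 3 classes (hypothetical sizes)
X, y = make_classification(
    n_samples=400, n_features=200, n_informative=20, n_classes=3, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Rank features by importance, using the training split only
forest = ExtraTreesClassifier(n_estimators=200, random_state=0).fit(X_train, y_train)
top50 = np.argsort(forest.feature_importances_)[::-1][:50]

# Train the SVM on the 50 selected features
svm = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
svm.fit(X_train[:, top50], y_train)
accuracy = svm.score(X_test[:, top50], y_test)
```

Ranking the features on the training split alone keeps the test split untouched by the selection step.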

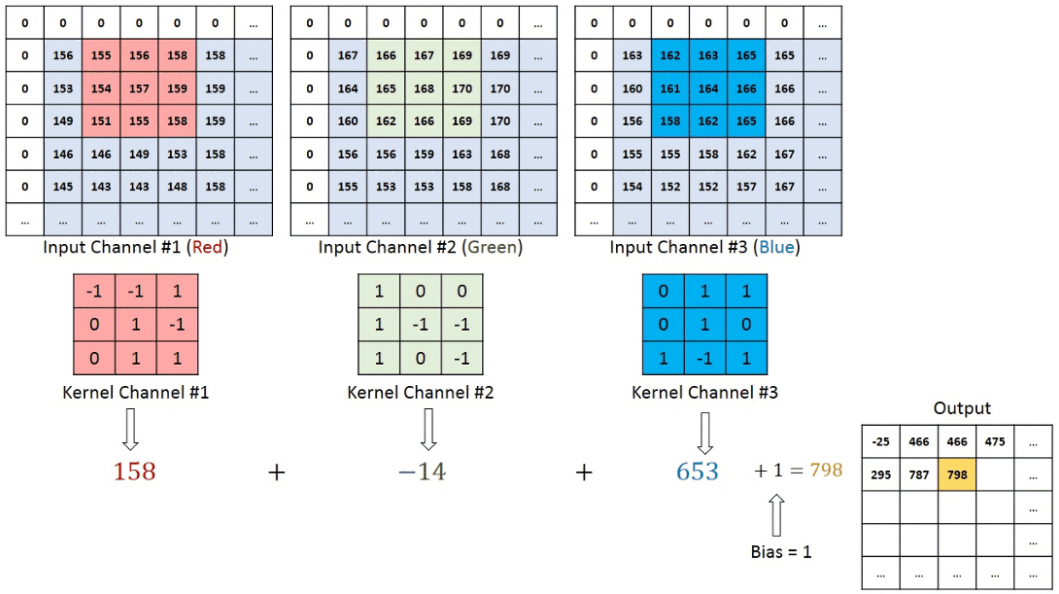

Deep learning models

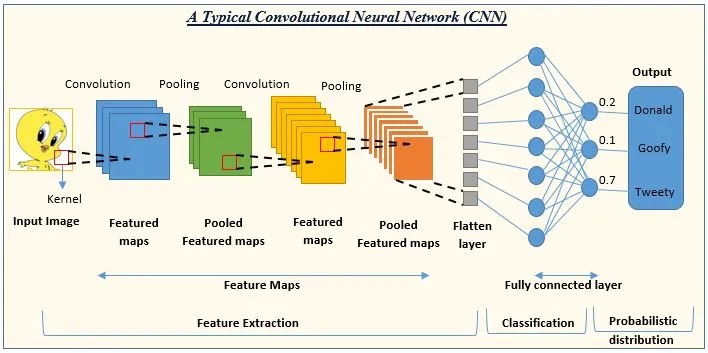

Convolutional Neural Networks (CNN)

- Convolutional layer

  - Detects features, e.g. edges and textures, by applying filters

- Pooling layer

  - Reduces the dimensionality of feature maps

- Fully connected layer

  - Maps the features to the final output
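To make the three layer types concrete, here is a toy forward pass in plain NumPy. It is a sketch only: a real model would use a deep learning framework and learned weights, and the image size, the hand-written edge filter, and the four output classes are assumptions for illustration.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid cross-correlation of a 2-D image with a 2-D filter."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows and columns that do not fit are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(0)
image = rng.random((8, 8))                       # stand-in for a spectrogram patch
edge_filter = np.array([[1.0, 0.0, -1.0]] * 3)   # responds to vertical edges

features = np.maximum(conv2d(image, edge_filter), 0.0)  # convolution + ReLU, shape (6, 6)
pooled = max_pool(features)                              # pooling, shape (3, 3)
weights = rng.standard_normal((4, pooled.size))          # fully connected layer, 4 classes
probs = softmax(weights @ pooled.ravel())                # class probabilities
```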

How does a CNN work?

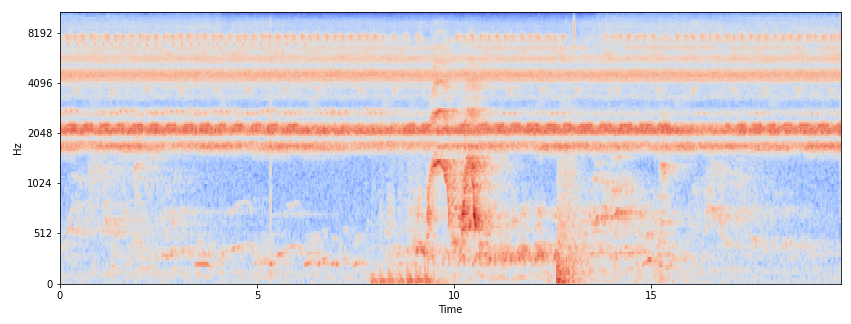

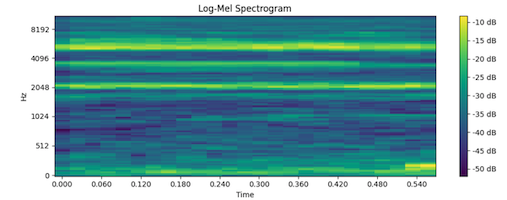

How do we change audio into an image?

Spectrogram: represents the intensity of different frequencies as they change over time, typically using a color map

Log-mel spectrogram: a variation of the standard spectrogram that applies a mel filter bank and then a log function

- Makes quieter sounds more detectable

- Aligns the representation with human auditory perception

- Normalizes the features
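A NumPy-only sketch of the audio-to-image step, assuming the common mel formula mel = 2595 · log10(1 + f/700), a Hann window, and hypothetical settings (`n_fft=512`, `hop=256`, `n_mels=64`). Audio libraries offer tuned versions of the same idea; the function names here are illustrative.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filter_bank(sr, n_fft, n_mels):
    """Triangular filters spaced evenly on the mel scale, shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    bank = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        left, center, right = hz_points[m:m + 3]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        bank[m] = np.clip(np.minimum(rising, falling), 0.0, None)
    return bank

def log_mel_spectrogram(signal, sr, n_fft=512, hop=256, n_mels=64):
    """Return a (n_mels, n_frames) array of mel-band power in dB."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(signal) - n_fft) // hop
    frames = np.stack([signal[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2          # power spectrum per frame
    mel_power = power @ mel_filter_bank(sr, n_fft, n_mels).T   # apply the filter bank
    return 10.0 * np.log10(mel_power + 1e-10).T                # log compression

# Stand-in signal: one second of a 1 kHz tone
sr = 16000
t = np.arange(sr) / sr
spec = log_mel_spectrogram(np.sin(2 * np.pi * 1000 * t), sr)
```

The resulting 2-D array can be treated like a single-channel image and fed to a CNN.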

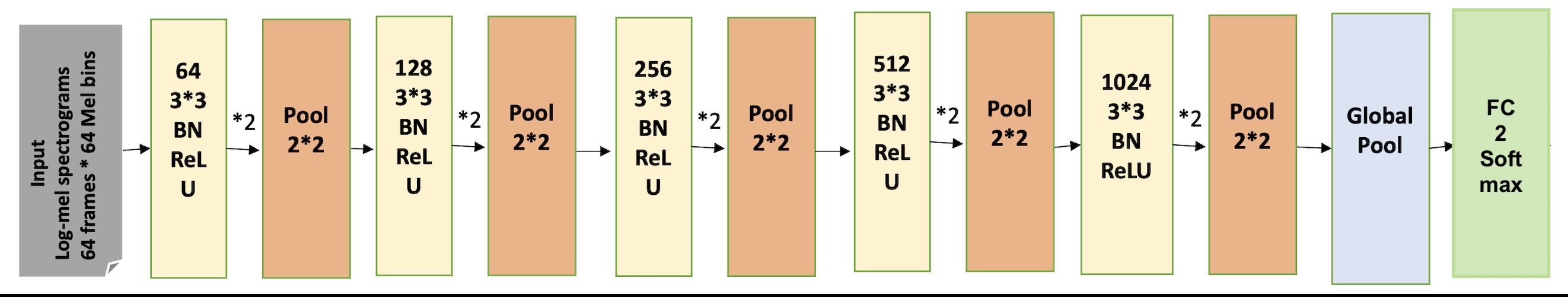

Model Architecture - Derived from PANNs

PANNs: Large-Scale Pretrained Audio Neural Networks for Audio Pattern Recognition

- Designed for audio event detection and classification

- A combination of convolutional blocks and pooling operations

Results

| Trained on | SVM | CNN | CNN10 |
|---|---|---|---|
| Sanctuary | 0.86 | 0.81 | 0.83 |
| Synthetic | 0.65 | 0.82 | 0.85 |
| Sanctuary + Synthetic | 0.87 | 0.83 | 0.87 |

Numbers are Unweighted Average Recall (UAR): the mean of the per-class recalls, so every class counts equally regardless of its size
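UAR matters here because vocalizations are rare compared to background. A small sketch with made-up labels (the class names and counts are illustrative, not project results) shows how it differs from plain accuracy:

```python
import numpy as np

def unweighted_average_recall(y_true, y_pred):
    """Mean of the per-class recalls; each class counts equally."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    recalls = [np.mean(y_pred[y_true == label] == label) for label in np.unique(y_true)]
    return float(np.mean(recalls))

# Made-up example: 8 background segments, 2 chimpanzee segments
y_true = ["background"] * 8 + ["chimpanzee"] * 2
y_pred = ["background"] * 8 + ["background", "chimpanzee"]

uar = unweighted_average_recall(y_true, y_pred)                   # (1.0 + 0.5) / 2 = 0.75
accuracy = float(np.mean(np.array(y_true) == np.array(y_pred)))   # 9 / 10 = 0.9
```

Missing one of the two chimpanzee segments barely moves accuracy but pulls UAR down to 0.75. scikit-learn exposes the same quantity as `balanced_accuracy_score`.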

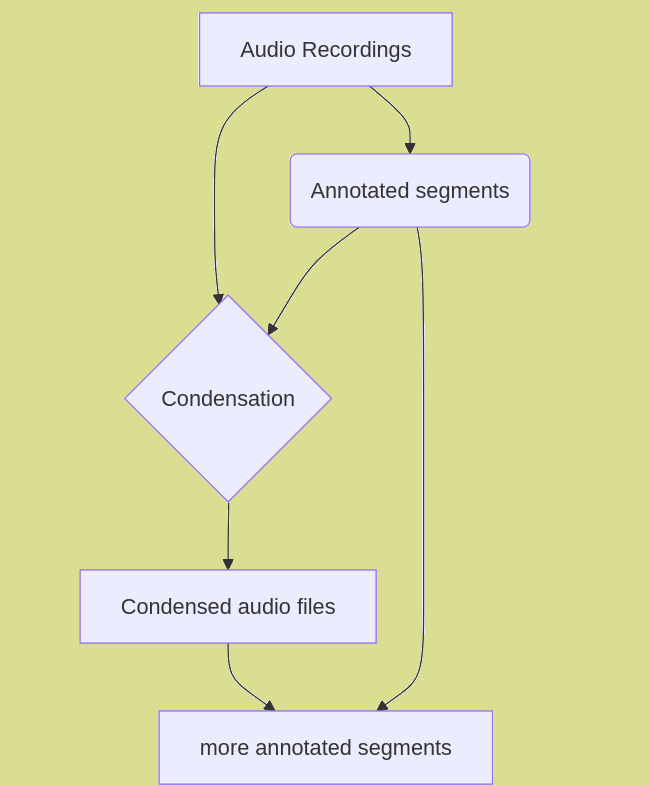

Deliverables (1/2)

Python modules for audio analysis

- Preprocessing

- Condensation for speeding up annotation

- Extracting relevant audio segments

- Generate synthetic data

- Feature extraction and selection

- Classification

  - SVM

  - Deep learning models

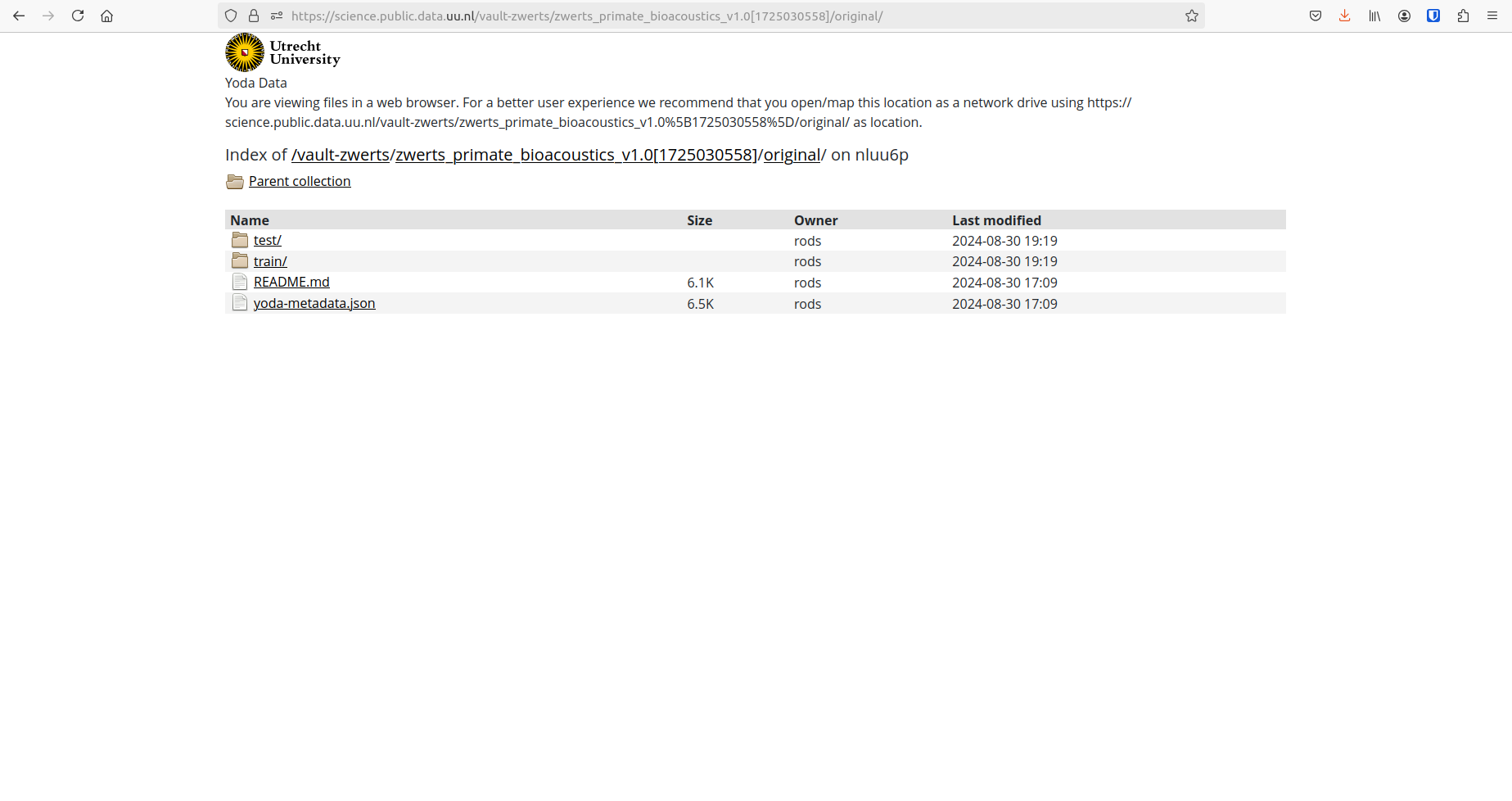

Deliverables (2/2)

Public train and test data

Challenges & Learning points

- Collaboration with ML audio expert

- Involvement of the researcher

- Data management

- Code management (Matlab scripts, repos with playground folders, no real git workflow, documentation, package from an early stage)

- Interspeech challenge

Future work

Publication

Generic Audio Analysis Platform

- Modular Architecture

Parisa Zahedi & Jelle Treep